EMERGING TECH

Facebook Inc. announced in March that it was exploring ways to use artificial intelligence to help suicidal users, and today the social network revealed several ways that it has been using its AI to prevent suicides.

Guy Rosen, vice president of product management at Facebook, said in a statement that it's important to quickly spot signs that a user is considering suicide so that he or she can get help as soon as possible, but that it would be impossible for Facebook to manually monitor all of its more than 2 billion users.

That's why the company uses AI to detect posts and live videos in which a user is expressing thoughts of suicide. Using pattern recognition, Facebook's AI looks for a wide range of indicators in a post or video, including comments from other users asking "Are you OK?" or "Can I help?"

In addition to detecting signs of suicidal thoughts, Facebook also uses AI to evaluate reports of potentially suicidal content that have been sent by other users. Facebook's AI prioritizes these reports so that its Community Operations team can review the most serious threats first and alert first responders if necessary. "We've found these accelerated reports — that we have signaled require immediate attention — are escalated to local authorities twice as quickly as other reports," said Rosen.

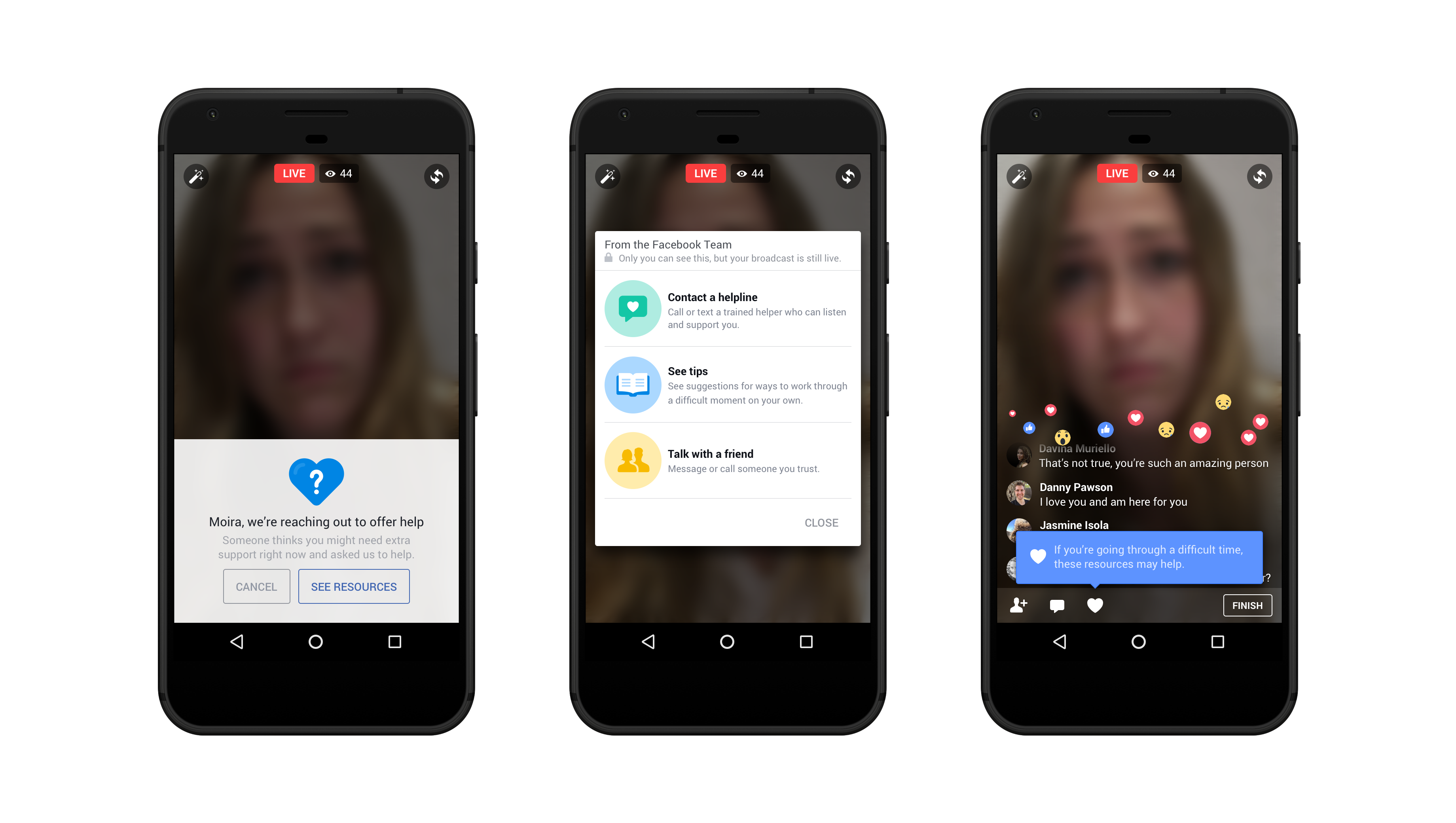

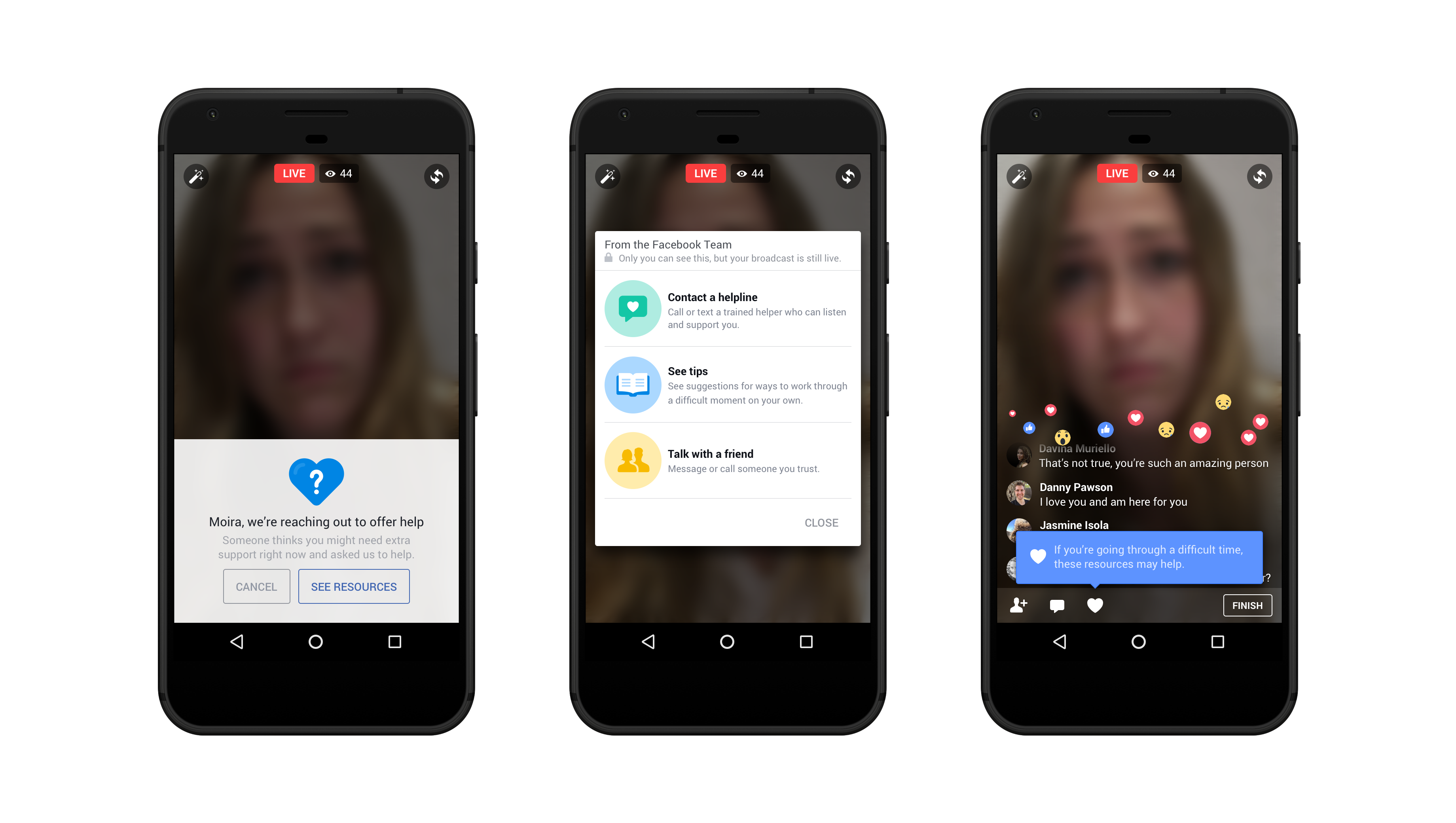

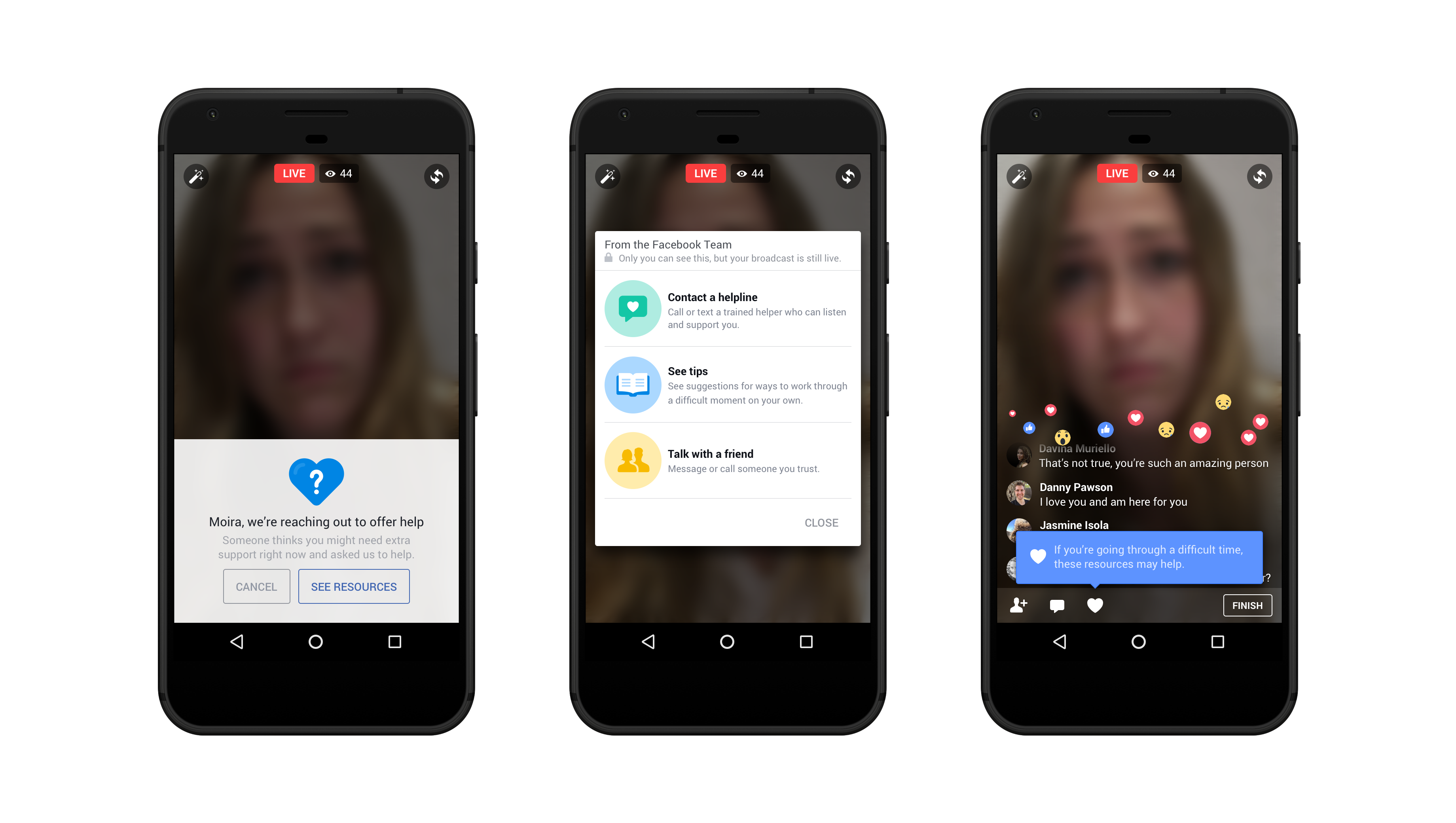

Not all posts detected by Facebook's system require immediate intervention from local authorities, so the social network also has other systems in place to connect users with support. In 2015, Facebook introduced features that make it easier for users who have been flagged for signs of suicide to seek help. The platform asks them whether they would like to reach out to a friend for support or contact a suicide help line. Facebook also provides links to tips on dealing with depression and suicidal thoughts.

Although preventing suicide is obviously a noble goal, Facebook’s project has a few worrying implications. After all, the social network’s AI is effectively policing users’ mental health, which could have long-term effects on their lives when it leads to contact with the authorities. It also raises the question of what else Facebook might do with this technology in the future, if it is not using it for other purposes already.

Alex Stamos, Facebook's chief security officer, responded to concerns about Facebook's AI in a tweet, saying, "The creepy/scary/malicious use of AI will be a risk forever, which is why it's important to set good norms today around weighing data use versus utility and be thoughtful about bias creeping in."

According to Rosen, Facebook’s proactive detection system has already led to more than 100 wellness checks by first responders, and the company plans to continue investing in its pattern recognition technology, making it more accurate and reducing the risk of false positives.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.