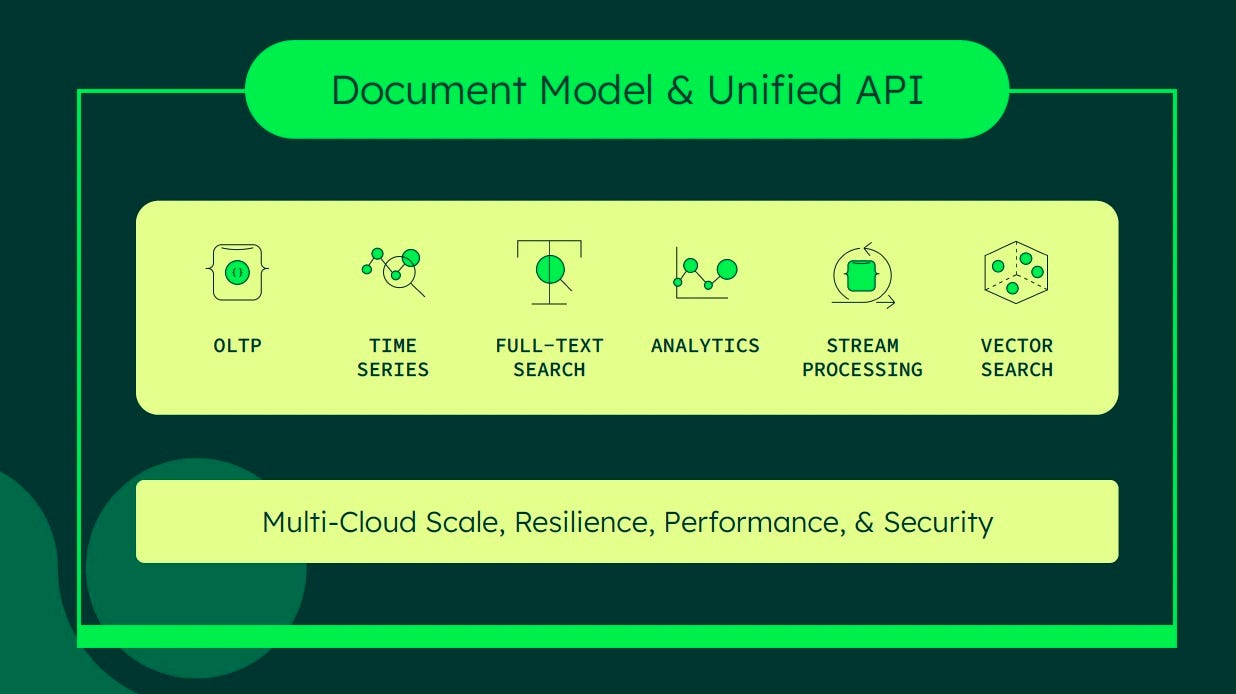

What is compelling is a unified ecosystem that supports generative artificial intelligence use cases on the same stack as traditional online transaction processing and online analytical processing use cases. In other words, it spares organizations from maintaining separate stacks for structured and unstructured data, for batch and streaming, and for transactional and analytical workloads. It also puts them on the road to a simplified, cost-effective data infrastructure that is easy to manage and grow. On top of that, it provides ironclad data security and governance across a hybrid multicloud deployment footprint.

MongoDB Inc. has been a frontrunner in non-relational databases, with more than 45,000 customers. Like many other vendors, it announced its generative AI capabilities in the summer of 2023. However, as its customers moved faster than expected to leverage these capabilities, MongoDB accelerated its own development and made Atlas Vector Search generally available today.

This document evaluates MongoDB Vector Search against the author’s own Vector Database Evaluation Criteria.

What stands out in MongoDB’s new offering is its name, Atlas Vector Search. Notice that it’s not called a vector database, which is a smart move because vector storage is a means to build and deploy generative AI apps, not a destination in itself. So, while MongoDB stays true to its roots as a developer platform by providing both vector storage and vector search, it chose to name its offering after the end goal: Vector Search.

MongoDB is also making the point that developing gen AI apps is no longer a task relegated to hard-to-find data scientists; rather, it has been “shifted left” into the domains. The concept of shift left has gained traction among data teams all year. Yours truly has written about it in the context of data security, quality and observability. Now, it is being applied to AI as well.

For a recap, here are the evaluation criteria:

Because the vector search capability is built as an extension of MongoDB’s time-tested database, it inherits many of the benefits of that underlying system. We will evaluate each criterion next.

MongoDB combines lexical and semantic searches in the same query application programming interface call, because the vector embeddings for its attributes are stored within the document. Atlas Vector Search augments existing Lucene-based Atlas Search via a single query and a single driver client-side, so it reduces dependencies for developers. Vector Search also provides additional context to LLMs to reduce hallucinations and brings in private data at inference time.
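When lexical and semantic results come back from the same API, they still have to be merged into one ranking. One common, vendor-neutral way to do that is reciprocal rank fusion (RRF). The sketch below is an illustration of RRF only, assuming the two ranked ID lists have already been retrieved; it is not a description of how MongoDB merges results internally, and the document IDs are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    where rank is 1-based. k=60 is the constant commonly used with RRF.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so the order is deterministic.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results from a full-text (lexical) query and a vector (semantic) query.
lexical_hits = ["doc3", "doc1", "doc7"]
semantic_hits = ["doc1", "doc4", "doc3"]

print(reciprocal_rank_fusion([lexical_hits, semantic_hits]))
```

Documents that rank well in both lists (here `doc1` and `doc3`) rise to the top, which is the behavior a hybrid query is usually after.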

Like full-text search, Vector Search is now available in Atlas, alongside capabilities like transactional processing, time series analytics and geospatial queries. This enables business use cases such as recommendation & relevance scoring, feature extraction, image search, Q&A systems and, of course, chatbots and the like.

MongoDB Atlas Vector Search powers the well-known retrieval-augmented generation, or RAG, pattern. According to this pattern, the vector store is first used to filter and create a relevant context using search indexes based on approximate nearest neighbor, or ANN, libraries before sending a prompt to an LLM to fulfill users’ requests.
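The retrieve-then-prompt flow of RAG can be sketched in a few lines. This is a minimal, self-contained illustration under stated assumptions: exact brute-force cosine similarity stands in for the ANN index, the three-dimensional embeddings and texts are toy data, and the final LLM call is omitted. The function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vector, documents, top_k=2):
    """Return the top_k documents most similar to the query (exact search)."""
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vector, doc["embedding"]),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, context_docs):
    """Ground the LLM prompt in the retrieved context only."""
    context = "\n".join(f"- {doc['text']}" for doc in context_docs)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Toy 3-dimensional embeddings; real models produce hundreds or thousands of dimensions.
docs = [
    {"text": "Refunds are issued within 14 days.", "embedding": [0.9, 0.1, 0.0]},
    {"text": "Our office is closed on Sundays.", "embedding": [0.0, 0.2, 0.9]},
    {"text": "Refund requests need an order number.", "embedding": [0.8, 0.3, 0.1]},
]
query_vec = [1.0, 0.2, 0.0]  # stand-in for the embedded user question

prompt = build_prompt("How do refunds work?", retrieve(query_vec, docs))
print(prompt)  # this prompt would then be sent to an LLM
```

Only the two refund-related passages reach the prompt; the unrelated one is filtered out before the model ever sees it.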

This process addresses the limited context window of LLMs. Also, pre-filtering binds user requests more tightly, which reduces latency and cost and improves the quality of the generated output. A high-quality prompt goes a long way toward reducing LLM hallucinations. The new $vectorSearch aggregation stage is used within a MongoDB Query Language, or MQL, aggregation pipeline and can pre-filter documents.
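A minimal sketch of such a pipeline, built as plain Python dictionaries so it runs without a database. The `$vectorSearch` stage fields (`index`, `path`, `queryVector`, `numCandidates`, `limit`, `filter`) and the `vectorSearchScore` metadata follow MongoDB's documented stage; the helper function, index name, field names and filter value are hypothetical. The `numCandidates >= limit` check mirrors a constraint MongoDB documents for the stage.

```python
def build_vector_search_pipeline(query_vector, *, index, path, limit,
                                 num_candidates, pre_filter=None):
    """Build an aggregation pipeline whose first stage is $vectorSearch."""
    if num_candidates < limit:
        raise ValueError("numCandidates must be >= limit")
    stage = {
        "index": index,
        "path": path,
        "queryVector": query_vector,
        "numCandidates": num_candidates,
        "limit": limit,
    }
    if pre_filter is not None:
        # Fields used here must be indexed as "filter" fields in the vector index.
        stage["filter"] = pre_filter
    return [
        {"$vectorSearch": stage},
        # Ordinary MQL stages can follow; here we keep the text and the score.
        {"$project": {"_id": 0, "text": 1,
                      "score": {"$meta": "vectorSearchScore"}}},
    ]


pipeline = build_vector_search_pipeline(
    [0.12, -0.03, 0.58],          # hypothetical query embedding
    index="vector_index",         # hypothetical index name
    path="embedding",             # hypothetical field holding the vectors
    limit=5,
    num_candidates=100,
    pre_filter={"category": {"$eq": "support"}},
)
# With PyMongo, this list could be passed to collection.aggregate(pipeline).
print(pipeline)
```

Keeping the pre-filter inside `$vectorSearch`, rather than in a later `$match`, narrows the candidate set before the nearest-neighbor search runs.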

Standalone vector databases have arisen in recent years to store embeddings for primarily unstructured data so that similarity searches can be performed on them. This ability unlocks deep intelligence from text, PDFs, images, videos, and the like. However, they need to integrate with other enterprise databases that have existing corporate data to deliver deeper insights.

MongoDB stores vector embeddings as attributes of its documents, natively, as arrays inside BSON documents. This allows MongoDB to store embeddings for all structured and unstructured content alongside the enterprise data.
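To make the document shape concrete, here is a hypothetical product document as a Python dictionary (PyMongo maps BSON arrays to Python lists), plus a small validation helper. The field names, values and the helper are illustrative assumptions, not part of any MongoDB API.

```python
# A hypothetical product document: business fields and their embedding
# live side by side in the same document.
product = {
    "_id": "sku-1001",
    "name": "Trail running shoe",
    "description": "Lightweight shoe with a grippy outsole for muddy trails.",
    "price": 129.0,
    "category": "footwear",
    # In practice this array comes from an embedding model and would hold,
    # for example, 1536 floats; it is shortened here for readability.
    "description_embedding": [0.021, -0.114, 0.087, 0.003],
}


def is_valid_embedding(doc, field, expected_dims):
    """Check that doc[field] is an array of numbers with the expected length."""
    vector = doc.get(field)
    return (
        isinstance(vector, list)
        and len(vector) == expected_dims
        and all(isinstance(x, (int, float)) for x in vector)
    )


print(is_valid_embedding(product, "description_embedding", 4))
```

A check like this is useful before insertion, because a vector index expects every embedding in the indexed field to have the same number of dimensions.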

The embeddings are created using an embedding model, such as OpenAI’s text-embedding-ada-002 or Google’s textembedding-gecko@001. MongoDB Atlas then indexes them using the Hierarchical Navigable Small World, or HNSW, algorithm to provide ANN vector search.
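The vector index has to declare the same dimensionality as the model that produced the embeddings (1,536 for text-embedding-ada-002, 768 for textembedding-gecko@001). The sketch below builds an index definition in the documented Atlas Vector Search shape (`fields` entries of type `vector` and `filter`); the helper function and field paths are hypothetical. As we understand it, the definition only declares fields, and Atlas builds the HNSW graph itself.

```python
# Output dimensions of the two embedding models named in the text.
MODEL_DIMENSIONS = {
    "text-embedding-ada-002": 1536,
    "textembedding-gecko@001": 768,
}


def vector_index_definition(model, vector_path, filter_paths=(), similarity="cosine"):
    """Build an Atlas Vector Search index definition for one embedding field."""
    if similarity not in {"cosine", "euclidean", "dotProduct"}:
        raise ValueError(f"unsupported similarity: {similarity}")
    fields = [{
        "type": "vector",
        "path": vector_path,
        "numDimensions": MODEL_DIMENSIONS[model],
        "similarity": similarity,
    }]
    # Fields declared as "filter" can be used in the $vectorSearch filter option.
    fields += [{"type": "filter", "path": p} for p in filter_paths]
    return {"fields": fields}


definition = vector_index_definition(
    "text-embedding-ada-002", "description_embedding", filter_paths=["category"])
# This definition could be submitted via the Atlas UI, the Atlas CLI or a driver.
print(definition)
```

Deriving `numDimensions` from the model name, rather than hard-coding it, avoids a mismatch when a team switches embedding models.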

With the introduction of Atlas Search Nodes, users can now scale their memory-intensive vector search workload independently from their transactional workload: the indexes live on the new Search Nodes rather than on the transactional cluster infrastructure. This separation helps provide better performance and high availability.

Gen AI is a hotbed of new companies and approaches. Consequently, one very important evaluation criterion is how well a database vendor’s vector capabilities integrate into this ever-expanding ecosystem of model providers and of independent software vendors developing integration, chaining and operations mechanisms.

MongoDB Atlas Vector Search is able to use embedding models from OpenAI, Cohere and open-source ones deployed at Hugging Face. LangChain, LlamaIndex and Microsoft’s Semantic Kernel have arisen as important players that help glue together many of the moving parts of RAG. MongoDB has integrations with all of them.

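In the high-dimensional vector space, ANN techniques such as HNSW compare the embedding of a user request against stored embeddings to find a specified number of nearest neighbors. MongoDB has added two tuning parameters, “numCandidates” and “limit”: numCandidates sets how many nearest-neighbor candidates the search considers, and limit sets how many results are returned. Raising numCandidates relative to limit generally improves accuracy at some cost in latency.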
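One practical way to choose numCandidates is to measure recall: compare approximate results on a sample of queries against exact brute-force results. The sketch below shows that measurement. The `sampled_search` function is a deliberately crude stand-in for ANN, not HNSW and not MongoDB's implementation; it only mimics the idea that examining more candidates raises the chance of finding the true neighbors. The random data is synthetic.

```python
import math
import random


def exact_top_k(query, vectors, k):
    """Brute-force nearest neighbors by Euclidean distance (the ground truth)."""
    order = sorted(range(len(vectors)), key=lambda i: math.dist(query, vectors[i]))
    return order[:k]


def recall_at_k(approx_ids, exact_ids):
    """Fraction of true nearest neighbors that the approximate search returned."""
    return len(set(approx_ids) & set(exact_ids)) / len(exact_ids)


def sampled_search(query, vectors, k, num_candidates, rng):
    """Crude ANN stand-in: rank only a random subset of num_candidates vectors.

    This is NOT HNSW; it only illustrates the candidates-versus-recall tradeoff.
    """
    candidates = rng.sample(range(len(vectors)), num_candidates)
    candidates.sort(key=lambda i: math.dist(query, vectors[i]))
    return candidates[:k]


rng = random.Random(42)
vectors = [[rng.random() for _ in range(8)] for _ in range(1000)]
query = [rng.random() for _ in range(8)]
limit = 10
truth = exact_top_k(query, vectors, limit)

for num_candidates in (10, 100, 500, 1000):
    found = sampled_search(query, vectors, limit, num_candidates, rng)
    print(num_candidates, recall_at_k(found, truth))
```

Recall climbs toward 1.0 as more candidates are examined; with a real index, the same loop would be run against the database while also recording query latency.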

MongoDB Atlas clusters can scale vertically by adjusting the tier of the cluster as well as horizontally through the use of sharding. Furthermore, with the introduction of Search Nodes, customers can even decouple their Search and Vector Search workloads from their database workloads to scale them independently. Lastly, Atlas clusters are available in any of the major hyperscalers: Amazon Web Services, Microsoft Azure and Google Cloud Platform.

Organizations are keen to contain the overall cost of the data ecosystem, in other words, the total cost of ownership, or TCO. A multifunction developer data platform enhanced by vector search avoids the cost of procuring and integrating specialized, disparate solutions. It also improves the consistency of the data pipeline and lowers the cost of debugging and maintenance.

Also, Vector Search runs directly on the cluster and consumes cluster resources like any other workload, so no additional billing model is needed.

MongoDB Atlas Vector Search leverages the underlying managed platform’s automatic patching, upgrades, scaling, security and disaster recovery capabilities. Additionally, Atlas’ high availability and automatic failover are multicloud, multiregion and multizone.

Deployment automation via continuous integration and continuous delivery, or CI/CD, is supported through partner tooling such as HashiCorp Terraform and the AWS CDK.

Atlas Vector Search inherits MongoDB’s security capabilities. Because data does not have to move from a corporate database to a separate vector database, unnecessary security tasks are avoided as well. Also, MongoDB extends its transactional consistency for operational workloads into the vector space by leveraging the metadata in the creation of embeddings. This, in turn, helps reduce hallucinations.

Fine-grained access control, separation of duties, encryption, auditing and forensic logs are some of the capabilities that Vector Search inherits from the underlying system. Additionally, you can take advantage of core Atlas capabilities like Queryable Encryption alongside your Vector Search workloads to prevent the inadvertent leakage of sensitive data such as personally identifiable information into model training or inference.

MongoDB’s unified query API across services, ranging from Create, Read, Update and Delete, or CRUD, operations on transactional data to time series to vector search, improves developer productivity by avoiding the context switching that would be incurred if each service were offered through disparate databases, query languages and drivers.

By virtue of Atlas’ managed services, the vector search leverages the ease of use of the existing document database. This makes it easy to build, deploy and maintain apps.

In addition, developers can test gen AI applications locally with the Atlas CLI, using their favorite IDE such as VS Code or IntelliJ, before deploying them in the cloud.

MongoDB’s vector search capability is not a separate SKU. The figure below shows how it extends the overall scope of MongoDB Atlas.

RAG is a useful way of asking large language models to generate responses grounded in your proprietary data rather than only the public data used to train the models. MongoDB Vector Search lets RAG architectures use semantic search to bring back relevant context for user queries, which reduces hallucinations, brings in real-time private company data, and closes the gap that opens when a large language model’s training data is out of date.

Although OpenAI’s November 2023 announcement moved GPT-4’s training cutoff from September 2021 to April 2023 with GPT-4 Turbo, organizations still want to leverage their most current data. MongoDB Vector Search provides a platform to develop and deploy generative AI applications on the most up-to-date corporate data. MongoDB lists its vector search capability as being used for conversational AI with chatbots and voicebots, co-pilots, threat intelligence and cybersecurity, contract management, question-and-answers, healthcare compliance and treatment assistants, content discovery and monetization, and video and audio generation.

Sanjeev Mohan is an established thought leader in the areas of cloud, modern data architectures, analytics and AI. He researches and advises on changing trends and technologies and is the author of “Data Product for Dummies.” Until recently, he was a Gartner vice president known for his prolific and detailed research, and he led the research direction for data and analytics. He has been a principal at SanjMo for more than two years, providing technical advisory to elevate category and brand awareness. He has helped several clients in areas like data governance, generative AI, DataOps, data products and observability.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.