INFRA

Generative artificial intelligence models will become more powerful than ever, thanks to a new, more advanced architecture for graphics processing units announced by Nvidia Corp. today.

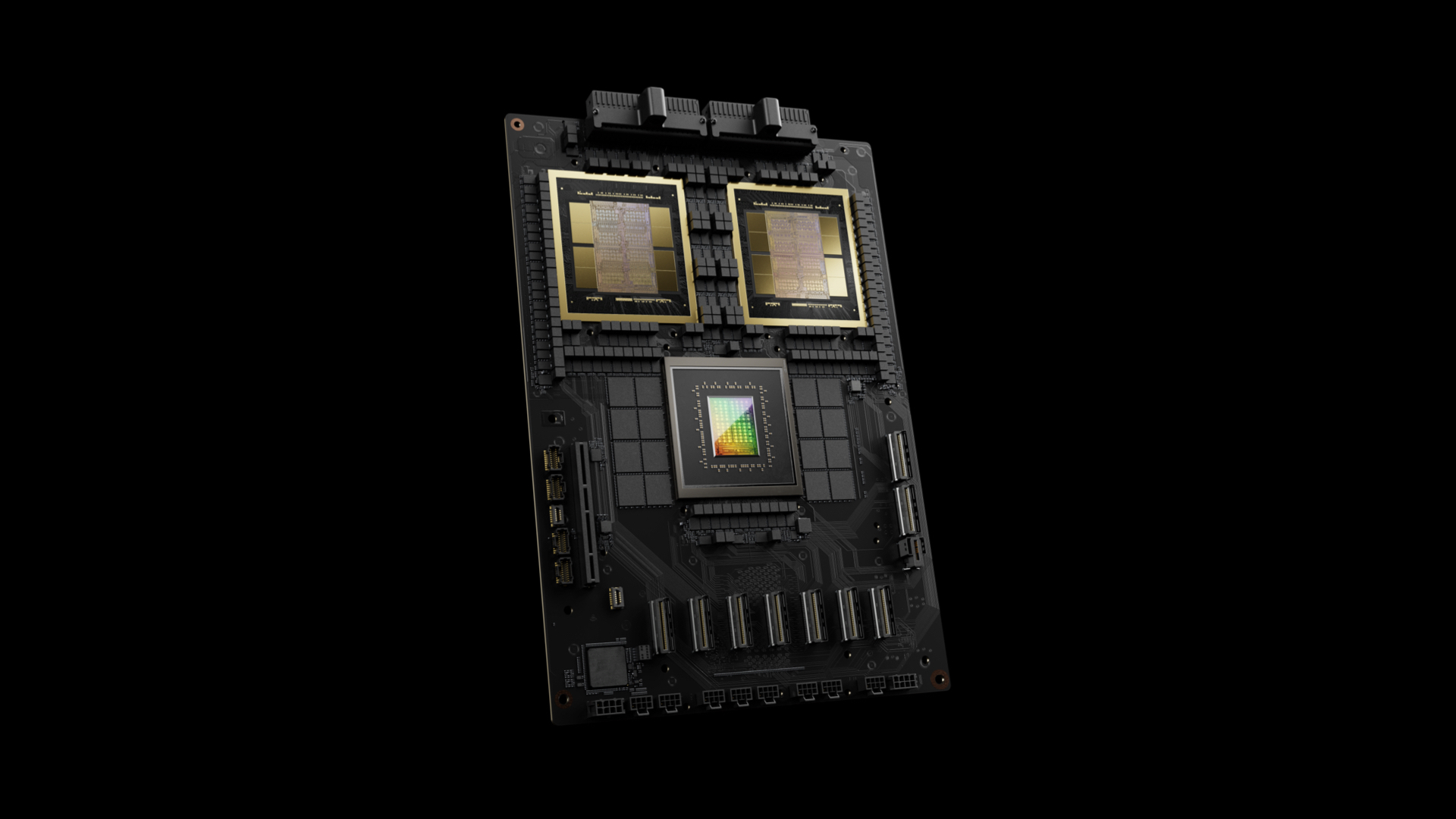

The company unveiled its all-new Blackwell GPU architecture (pictured) at its GTC 2024 event today, saying it’s based on six transformative new technologies that will take accelerated computing to the next level. With Blackwell GPUs, companies will be able to run real-time generative AI on trillion-parameter-scale large language models at up to 25 times lower cost and energy consumption than with Nvidia’s existing H100 GPUs, which are based on the older Hopper architecture.

Besides accelerating generative AI, Nvidia promised that the Blackwell architecture, which succeeds Hopper, will unlock new breakthroughs in data processing, engineering simulation, electronic design automation, computer-aided drug design and quantum computing. The architecture is named in honor of David Harold Blackwell, a renowned American statistician and mathematician who made significant contributions to game theory, probability theory, information theory and statistics.

Blackwell’s six new technologies include a custom-built four-nanometer manufacturing process developed by Taiwan Semiconductor Manufacturing Co. Ltd. The process forms the basis of the new GPU, which features two reticle-limit GPU dies connected by a 10-terabyte-per-second chip-to-chip link so that they function as a single, unified GPU, the company explained.

Also new is Nvidia’s second-generation Transformer Engine. Backed by new micro-tensor scaling support and dynamic range management algorithms integrated into the Nvidia TensorRT-LLM and NeMo Megatron frameworks, it lets Blackwell support double the compute and model sizes with new four-bit floating point (FP4) AI inference capabilities.

The chips also incorporate fifth-generation NVLink, which delivers up to 1.8 terabytes per second of bidirectional throughput per GPU. It enables high-speed communication among up to 576 GPUs, allowing them to power more complex LLMs than was previously possible.

In addition, the Blackwell GPUs will be the first such chips to feature a dedicated engine for reliability, availability and serviceability, called the RAS Engine. They also add chip-level capabilities for AI-based preventative maintenance, which runs diagnostics and forecasts reliability issues. The result is greater system uptime, allowing large-scale AI deployments to run uninterrupted for weeks or even months at a time, Nvidia said.

Blackwell also introduces more advanced confidential computing capabilities to protect AI models and their data, making the GPUs a more realistic proposition for privacy-sensitive industries such as healthcare and financial services. Finally, Nvidia revealed a dedicated Decompression Engine that accelerates database queries to boost performance in data analytics and data science.

The new architecture forms the basis of the new GB200 Grace Blackwell Superchip, which connects two Nvidia B200 Tensor Core GPUs to the Nvidia Grace central processing unit over a 900-gigabyte-per-second, ultra-low-power NVLink chip-to-chip interconnect.

Systems built on the GB200 Grace Blackwell Superchip can be connected using Nvidia’s newest Quantum-X800 InfiniBand networking switches. Quantum-X800 InfiniBand is said to be the first networking platform capable of end-to-end throughput of 800 gigabits per second, which will help push the boundaries of AI and other high-performance computing workloads by linking more GPUs together.

Gilad Shainer, Nvidia’s senior vice president of networking, said the Nvidia X800 switches will enable trillion-parameter-scale generative AI once they become available on Microsoft Azure, Oracle Cloud Infrastructure and other platforms at a later date.

The company explained that the GB200 Grace Blackwell Superchips will be a key component of its new Nvidia GB200 NVL72 platform, which also underpins its latest DGX SuperPOD. GB200 NVL72 is a multinode, liquid-cooled, rack-scale system for compute-intensive workloads. It combines 36 GB200 Grace Blackwell Superchips, comprising 72 Blackwell GPUs and 36 Grace CPUs, along with BlueField-3 data processing units.

Together, these components operate as a single, more powerful GPU with up to 1.4 exaflops of AI performance and 30 terabytes of fast memory. Nvidia said this delivers up to a 30-times performance increase for LLM inference workloads compared with the same number of Nvidia H100 Tensor Core GPUs.

The latest Nvidia DGX SuperPOD is expected to launch later in the year, but customers will have plenty of alternatives to investing in such a platform. At the GTC event, the company said Amazon Web Services Inc., Google LLC and Microsoft Corp. will be among the first to offer access to Blackwell GPUs on their public cloud infrastructure platforms, in addition to Nvidia’s own DGX Cloud service. Other options include sovereign cloud platforms such as Indosat Ooredoo Hutchison, NexGen Cloud, Oracle EU Sovereign Cloud and the Oracle U.S., U.K. and Australian government clouds, which will also get early access to Blackwell GPUs.

A final option is to buy one of a host of new Blackwell GPU-based servers from third parties, including Dell Technologies Inc., Hewlett Packard Enterprise Co., Lenovo Group Ltd., Cisco Systems Inc. and Super Micro Computer Inc. Those companies, and others, have promised to debut their first Blackwell GPU-based servers later this year.

TheCUBE Research analyst John Furrier and ZK Research Principal Zeus Kerravala summed up this and other announcements on the first day of GTC:
