NEWS

NEWS

NEWS

NEWS

NEWS

NEWS

Trifacta Inc. today is announcing a version of its namesake data preparation tool designed for non-big data environments.

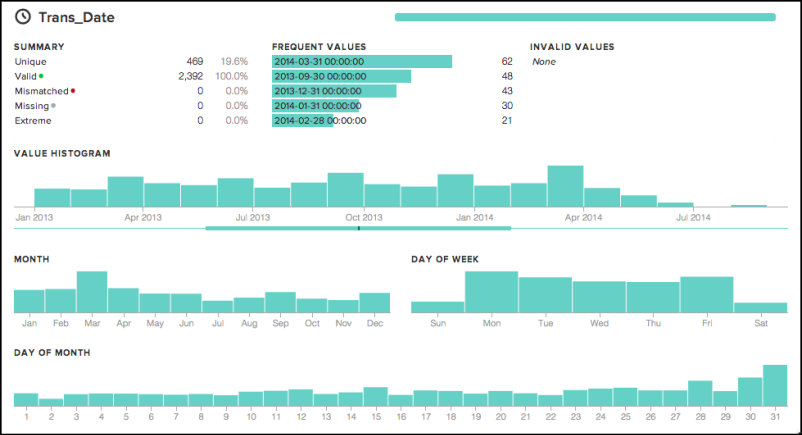

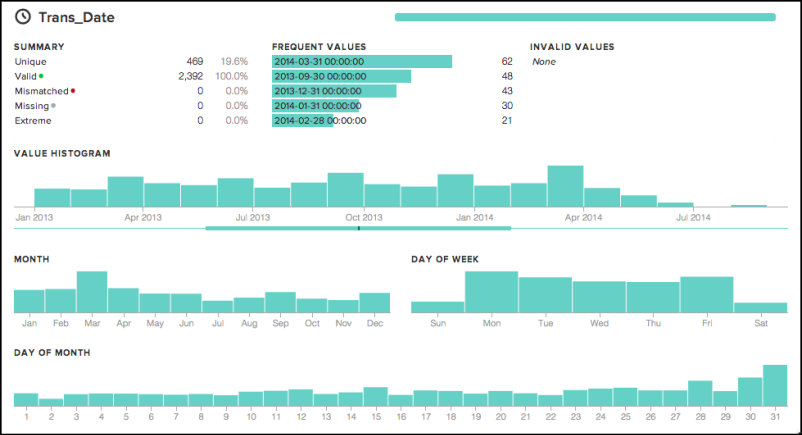

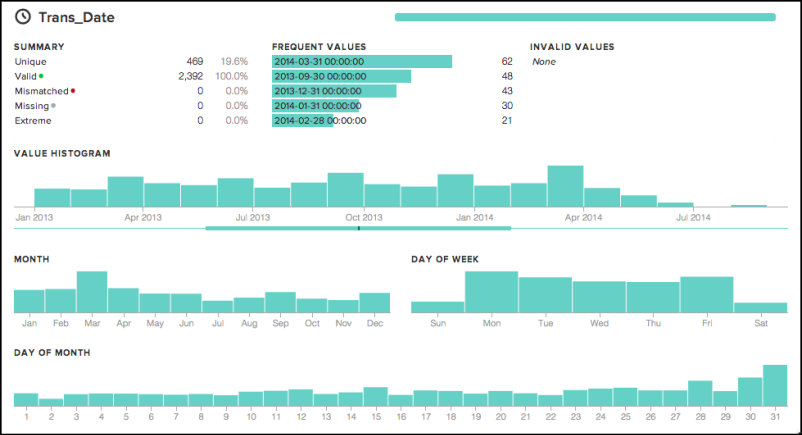

The company, which calls the discipline it enables “data wrangling,” said Wrangler Edge is intended for use by organizations that may not have Hadoop or an enterprise data lake but that still want to do data analysis in business intelligence and visualization tools like Tableau Software Inc.’s. A key feature of Wrangler Edge is the ability for users to visualize data as it’s transformed and imported into an analytics engine (above). “What Tableau did with democratizing visualization we’re doing in the data preparation space,” said Will Davis, director of product marketing at Trifacta.

Big data projects usually involve an “extract/transform/load” process that can be laborious but is a necessary step when data is being combined from multiple sources, including both structured and unstructured formats. “ETL works well when there are well-defined schemas, and those tools are typically managed by IT people,” Davis said. “Where we shine is in working with data from different sources, such as files and cloud.”

Trifacta was the product of joint research at Stanford University and the University of California at Berkeley. A free desktop version proved so popular that the company raised money to commercialize the tool, releasing its first edition in 2012. It says more than 4,000 companies in 132 countries now use Wrangler to explore, transform and join diverse data. Trifacta has raised more than $76 million in venture funding.

Wrangler Edge builds on the free Wrangler desktop edition with support for multiple users, larger data volumes, broader connectivity, cloud and on-premise deployment options and the ability to schedule and operationalize wrangling workflows. The company also sells a high-end enterprise version aimed at more traditional IT deployments running Hadoop.

Wrangler Edge’s visualization features enable users to preview the data they are preparing both to determine its value and to ensure format consistency, Davis said. The tool can read data from both HDFS and external sources such as non-Hadoop data stores and files. “We ingest via an API [application program interface] framework that allows us to build connectors from the sources we connect to as well as the sources we publish to,” Davis added. “It’s an extensible framework.”

Deployment is on an edge server or gateway node. Users can write “recipes” for on-the-fly data transformation of data sets directly in the application. For multi-gigabyte datasets, the software uses a memory-resident framework called Photon to enable transformation. Data can also be transformed by compiling recipes to the Apache Spark and MapReduce parallel processing frameworks. Currently, the product works only with batch data, but the company is considering adding streaming options, Davis said.

Trifacta wouldn’t disclose pricing information.

THANK YOU