Facebook Inc. today introduced a new research dataset and an open-source development module to enable the creation of more capable “embodied” artificial intelligence models.

Embodied AI is a term that usually refers to machine learning models installed on robots. These neural networks directly interact with their environment and often also require the ability to navigate that environment, for example in the context of a warehouse robot built to ferry packages between shelves. Facebook researchers have made major contributions to the field of embodied AI navigation in recent years.

The research dataset the company open-sourced today, SoundSpaces, is designed to help robots find their way around more efficiently by allowing them to analyze environmental sounds. Audio is useful for navigation because it adds context to the visual data a robot collects with its cameras. For example, if a user asks a hypothetical robotic home assistant to fetch a ringing smartphone, tracking the sound to its source is potentially much faster than visually checking every room where the device could be located.

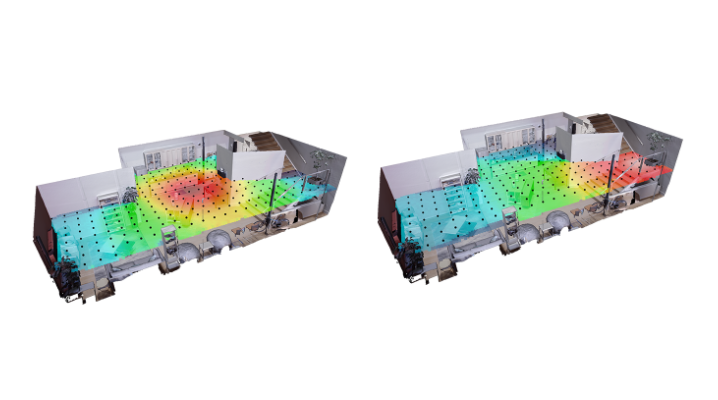

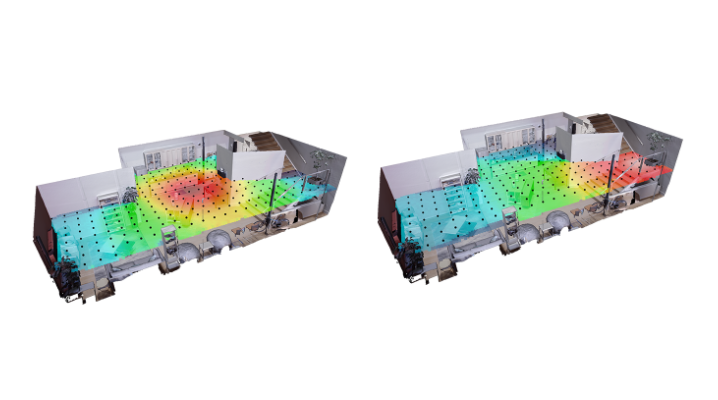

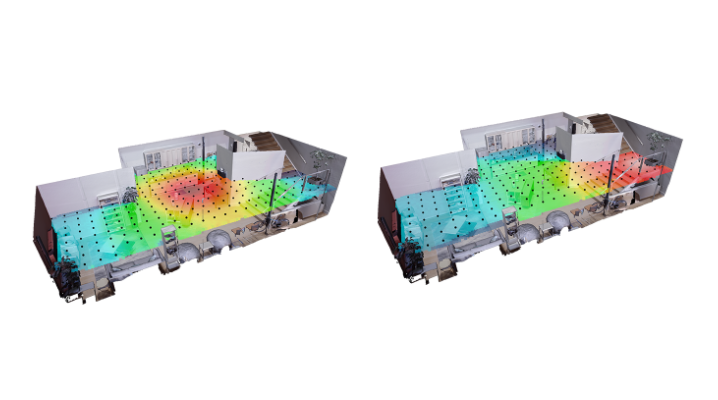

SoundSpaces provides a collection of audio files that AI developers can use to train sound-aware AI models in a simulation. These audio files are not simple recordings but rather “geometrical acoustic simulations,” as Facebook describes them. The simulations capture how sound waves reflect off surfaces such as walls and how they interact with different materials, along with other data developers can use to build realistic-sounding environments for training AI models.
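To make the idea of a geometrical acoustic simulation more concrete, here is a toy sketch using the textbook first-order image-source method for an empty rectangular room: each wall reflection is modeled as sound coming from a mirrored copy of the source, so it arrives later and weaker than the direct sound. This is not how the SoundSpaces data was generated and not a SoundSpaces API; the room dimensions, the single absorption coefficient shared by all walls, and every function and field name below are hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass

SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at roughly 20 °C


@dataclass
class Arrival:
    path: str         # "direct" or which wall the sound bounced off
    delay_s: float    # travel time from source to listener, in seconds
    amplitude: float  # relative pressure amplitude (1/r spreading times wall loss)


def first_order_arrivals(room, source, listener, absorption=0.3):
    """Toy first-order image-source model for an empty shoebox room.

    room: (Lx, Ly, Lz) in meters, with walls at 0 and L on each axis.
    source, listener: (x, y, z) points inside the room.
    absorption: fraction of sound energy each wall absorbs (one hypothetical
    material for every wall, a deliberate simplification).
    """
    if math.dist(source, listener) == 0:
        raise ValueError("source and listener must not coincide")
    wall_gain = math.sqrt(1.0 - absorption)  # energy loss -> pressure amplitude loss
    paths = [("direct", tuple(source), 1.0)]
    for axis, length in enumerate(room):
        # Mirror the source across the wall at 0 and the wall at L on this axis.
        for wall_name, mirrored in (("min", -source[axis]), ("max", 2 * length - source[axis])):
            image = list(source)
            image[axis] = mirrored
            paths.append((f"axis{axis}-{wall_name}", tuple(image), wall_gain))
    arrivals = []
    for name, position, gain in paths:
        distance = math.dist(position, listener)
        arrivals.append(Arrival(name, distance / SPEED_OF_SOUND_M_S, gain / distance))
    # Earliest arrival first: the direct path always comes before any reflection.
    return sorted(arrivals, key=lambda a: a.delay_s)


if __name__ == "__main__":
    for a in first_order_arrivals((5.0, 4.0, 3.0), (1.0, 1.0, 1.5), (4.0, 3.0, 1.5)):
        print(f"{a.path:12s} {a.delay_s * 1000:6.2f} ms  amp {a.amplitude:.3f}")
```

Real acoustic simulators go much further, tracing many higher-order bounces and frequency-dependent material effects, but even this sketch shows why echoes carry information about room geometry that a navigating agent could exploit.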

“To our knowledge, this is the first attempt to train deep reinforcement learning agents that both see and hear to map novel environments and localize sound-emitting targets,” Facebook research scientists Kristen Grauman and Dhruv Batra wrote in a blog post today. “With this approach, we achieved faster training and higher accuracy in navigation than with single modality counterparts.”

Facebook today also said that it has open-sourced a tool called Semantic MapNet. It’s a software module developers can use to give their models a kind of spatial memory in order to improve navigation.

When AI-powered robots enter a new environment, say a new room, they often create a map to make future trips to that location easier. The effectiveness with which those future trips will be carried out depends in no small measure on the quality of the robot’s map.

Facebook’s Semantic MapNet module promises to improve map quality by making it easier to capture small and hard-to-see objects in an environment, while also enabling AI models to more accurately remember the position of larger objects. The result is more accurate navigation.
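As a rough illustration of what a top-down semantic map with "memory" means, the sketch below keeps, for each grid cell on the floor plan, a vote count per object label, so objects observed on earlier steps are still remembered later. This is not how Semantic MapNet works internally (it learns feature representations rather than counting labels); the class name, cell size, and the assumption that observations already arrive as labeled points in world coordinates are all hypothetical.

```python
import math
from collections import Counter, defaultdict


class TopDownSemanticMap:
    """Hypothetical toy allocentric map: each cell keeps label vote counts."""

    def __init__(self, cell_size_m: float):
        self.cell_size_m = cell_size_m
        self.votes = defaultdict(Counter)  # (row, col) -> Counter of labels

    def _cell(self, x_m: float, y_m: float):
        # Convert world coordinates in meters to integer grid indices.
        return (math.floor(y_m / self.cell_size_m), math.floor(x_m / self.cell_size_m))

    def integrate(self, observations):
        """Add (x_m, y_m, label) points already expressed in world coordinates,
        e.g. obtained from a depth image plus the robot's pose (assumed here)."""
        for x_m, y_m, label in observations:
            self.votes[self._cell(x_m, y_m)][label] += 1

    def label_at(self, x_m: float, y_m: float):
        """Return the most frequently observed label in that cell, or None."""
        counts = self.votes.get(self._cell(x_m, y_m))
        if not counts:
            return None
        return counts.most_common(1)[0][0]


if __name__ == "__main__":
    m = TopDownSemanticMap(cell_size_m=0.5)
    m.integrate([(1.1, 2.2, "sofa"), (1.2, 2.3, "sofa")])  # first visit
    m.integrate([(1.15, 2.25, "chair")])                   # later, noisier view
    print(m.label_at(1.0, 2.0))
```

Majority voting lets a single noisy observation be outvoted by earlier consistent ones, which is one simple way a map can "remember" where a larger object is.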

“Semantic Mapnet sets a new state of the art for predicting where particular objects, such as a sofa or a kitchen sink, are located on the pixel-level, top-down map that it creates,” Grauman and Batra wrote. “It outperforms the previous approaches and baselines on mean-IoU, a widely used metric for the overlap between prediction and ground truth.”
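Mean-IoU (mean intersection-over-union) compares a predicted label map with the ground-truth map class by class: for each class, it divides the number of cells both maps assign to that class by the number of cells either map assigns to it, then averages those ratios across classes. The sketch below is a minimal, dependency-free version for small integer label grids. The convention of skipping classes that appear in neither map is one common choice, assumed here; evaluation code used in published benchmarks may differ in details like that.

```python
def mean_iou(pred, gt, num_classes):
    """Mean intersection-over-union between two equally sized 2D label grids.

    pred, gt: lists of rows of integer class ids in range(num_classes).
    Classes absent from both grids are skipped rather than scored.
    """
    if len(pred) != len(gt) or any(len(p) != len(g) for p, g in zip(pred, gt)):
        raise ValueError("pred and gt must have the same shape")
    intersection = [0] * num_classes
    union = [0] * num_classes
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            if p == g:
                intersection[p] += 1  # cell agrees: counts once for that class
                union[p] += 1
            else:
                union[p] += 1         # disagreement enlarges both classes' unions
                union[g] += 1
    ious = [i / u for i, u in zip(intersection, union) if u > 0]
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    # Hypothetical 4x4 top-down maps: 0 = floor, 1 = sofa, 2 = kitchen sink.
    gt = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 0, 0], [2, 2, 0, 0]]
    pred = [[0, 0, 1, 1], [0, 1, 1, 1], [0, 0, 0, 0], [2, 0, 0, 0]]
    print(round(mean_iou(pred, gt, num_classes=3), 3))
```

In this toy example, the sofa and sink predictions each miss or overshoot by one cell, and because the sink is small, that single cell halves its IoU. This is why the metric rewards accurately capturing small objects, not just large ones.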

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.