INFRA

Not surprisingly, Nvidia Corp. used its GPU Technology Conference last week to announce updates to what it’s doing in the areas of artificial intelligence, supercomputing and autonomous vehicles. But the 2021 version of GTC had a new twist: a much stronger enterprise focus than ever before.

Nvidia, best known for its graphics processing units and the associated software that accelerates graphics, is a familiar name to the gaming community, developers, scientists and others, but it certainly hasn’t been a household name with enterprise information technology pros. Now the company is trying to change that.

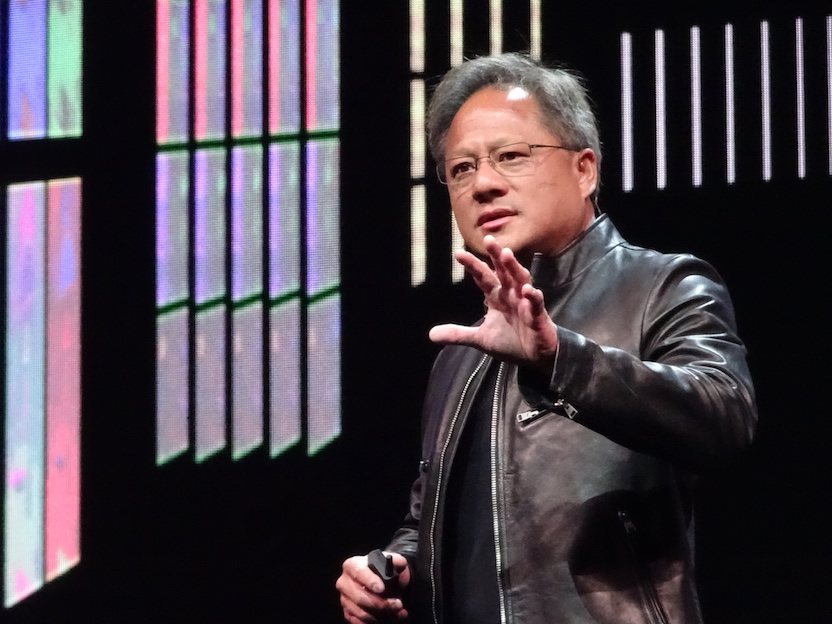

The opportunity for Nvidia has been created by the evolution of the data center. The fundamental tenet of my research has always been that opportunities are created from market transitions, and the data center is on the precipice of major change. During his keynote, Nvidia Chief Executive Jensen Huang (pictured) called the data center the “new unit of computing,” which is a topic I’ve written about for several years now.

To understand what he means, consider an old unit of compute: the server. It was a full, turnkey device with integrated processors, memory, network infrastructure, storage and everything else needed to run an application. All of that technology was connected with a physical backplane inside the computer.

Although this worked, resources were used poorly. If server A was out of storage capacity but server B was using only 5% of its own, you couldn’t move the spare storage from B to A. Then things changed. The industry developed technology to extract the physical components and have them located outside the box. In this model, the network becomes the backplane of the distributed server. Now the technology enables us to have an entire data center look like that single unit of compute, and this is the industry shift that Nvidia is working toward.
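The stranded-capacity problem is easy to see in a few lines of code. The following is a minimal sketch of the server A and server B example above, not a model of any Nvidia product; the `Server` class, the helper functions and the 10-terabyte capacities are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    capacity_tb: float  # total local storage (hypothetical figure)
    used_tb: float      # storage already consumed

    @property
    def free_tb(self) -> float:
        return self.capacity_tb - self.used_tb


def fits_siloed(servers, owner, request_tb):
    """Siloed model: a workload can use only its own server's free storage."""
    server = next(s for s in servers if s.name == owner)
    return server.free_tb >= request_tb


def fits_pooled(servers, request_tb):
    """Pooled model: free storage anywhere on the network can serve the request."""
    return sum(s.free_tb for s in servers) >= request_tb


servers = [
    Server("A", capacity_tb=10.0, used_tb=10.0),  # server A is full
    Server("B", capacity_tb=10.0, used_tb=0.5),   # server B is 5% used
]

print(fits_siloed(servers, "A", 2.0))  # False: A has no room of its own
print(fits_pooled(servers, 2.0))       # True: B's idle capacity is reachable
```

In the siloed case, a 2-terabyte request on server A fails even though server B is nearly empty. Once the network acts as the backplane, the same request succeeds because the free capacity is counted across the whole pool.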

The evolution of the data center requires new types of infrastructure, which is what prompted the company to purchase Mellanox Technologies Ltd. and Cumulus Networks Inc. The modernized data center requires a fast network to act as that backplane. That’s not the end of its enterprise ambitions, though. At GTC, Nvidia also announced the following:

Nvidia is best known as a GPU company, but it is now jumping into the central processing unit market with the introduction of Project Grace. It’s named after Grace Hopper, a nice shout-out to one of the first female computer scientists. Although Grace is being positioned as a CPU, it’s actually a number of Arm chips linked together using Nvidia’s NVLink technology.

Moore’s Law is reaching its physical limits, and this requires a new way to think about processing. The multiprocessing capabilities of Grace enable it to handle significantly more data than a traditional x86 processor. To be clear, Grace CPUs are meant for accelerated computing and other demanding use cases. Personal computers and low-end servers will still require CPUs from Intel Corp. and Advanced Micro Devices Inc., but Grace does expose a lack of innovation from them, particularly Intel, which has never cracked the AI market.

Last year, Nvidia debuted its BlueField data processing unit, also known as a DPU. The concept of the DPU is to offload many of the processing-intensive tasks from the CPU on the server by having them run on the DPU itself. The cards are a complete mini-server on a card and include software-defined networking, storage and security acceleration, enabling the server to do more of what it was meant to do.

The new BlueField-3 has been beefed up and delivers the equivalent of 300 CPU cores and 1.5 trillion operations per second. For applications such as machine learning, ray tracing, cybersecurity or other computationally intensive tasks, BlueField becomes critical in scaling server capacity.

Although the data center can be thought of as a single unit of compute, there are still requirements for an integrated server. As businesses embark on AI initiatives, they require highly tuned equipment. The challenge is putting all the components together, since most businesses don’t have much experience with AI and machine learning.

For this, Nvidia offers a range of DGX servers, which are full turnkey servers with validated designs, made specifically for the demands of AI. The portfolio starts with DGX Stations, a desktop form factor for individuals or work group use. Customers can purchase them outright or for $9,000 a month in a subscription model announced at GTC.

There are also server form factors for enterprisewide use where the DGX would be a company resource. At the event, Nvidia announced a new version of the high end of the line, DGX SuperPOD, that introduces BlueField-2 DPUs in the server for improved security and workload performance. SuperPOD achieves the same processing capability as many supercomputers by combining multiple DGX systems.

It’s important to note that although customers can purchase the DGX from Nvidia reseller partners, DGX was designed to be a reference design for its storage partners. Businesses can buy DGX systems integrated with NetApp, DDN, VAST Data, WekaIO and other major storage vendors.

Those were the major news items that revolve around enterprise computing, but the company did make other announcements, including its Morpheus security framework and Jarvis conversational AI platform.

Morpheus should prove invaluable to security professionals, as protecting data centers has gotten increasingly complex. The number of threats continues to rise, as does the volume of data being generated from network and security systems. Threat actors are using AI to launch attacks, and it’s unrealistic to expect security pros to combat this with manual methods. Morpheus is an open application framework that provides developers a pre-trained AI pipeline that’s optimized for security.

The conversational AI that Jarvis provides supports a number of use cases, including transcription, translation, virtual bots and other functions to improve collaboration and customer service. During his keynote, Huang referred to voice AI as the “holy grail” of AI because it’s very difficult to do well. Nvidia has run 60,000 hours of voice through the model in training, and it’s now 90% accurate. Like Morpheus, this is a platform, and developers can refine the models using their own data for vertical use cases.

GTC has long been a favorite event for developers, gamers and the auto industry, but Nvidia is now making some serious inroads into the enterprise. I expect that over the next few years, the number of enterprise-focused sessions and attendees will continue to grow as Nvidia extends its reach into other areas of business technology.

Zeus Kerravala is a principal analyst at ZK Research, a division of Kerravala Consulting. He wrote this article for SiliconANGLE.
