AI

Facebook Inc.’s artificial intelligence research unit has open-sourced what it claims is the world’s first chatbot capable of building a long-term memory that it can continually access.

That, combined with its ability to search the internet for up-to-date information, allows it to have more sophisticated conversations on almost any topic, researchers said today.

The new chatbot is called BlenderBot 2.0, and it’s a major upgrade to the original version of BlenderBot that was open-sourced last year. BlenderBot 1.0 was the first chatbot in the world to blend skills such as empathy, knowledge and personality into one AI system, so giving it the ability to learn and remember is an obvious next step.

In a blog post today, Facebook AI research scientist Jason Weston and research engineer Kurt Shuster explained that one of the problems with BlenderBot 1.0 and other advanced language models such as OpenAI’s GPT-3 is that they suffer from what’s called “goldfish memory.” That means they’re limited to knowing only what they learned during training.

In other words, they can’t learn new things – so, for example, the first BlenderBot doesn’t know that NFL superstar Tom Brady left the New England Patriots and won the 2021 Super Bowl with the Tampa Bay Buccaneers, because that happened after it was trained. Similarly, it might know about past TV shows and movies, but it isn’t aware of new series such as “WandaVision.”

“While existing systems can ask and answer basic questions about things like food, movies, or bands, they typically struggle with more complex or freeform conversations, like, for example, discussing Tom Brady’s career in detail,” Weston and Shuster wrote.

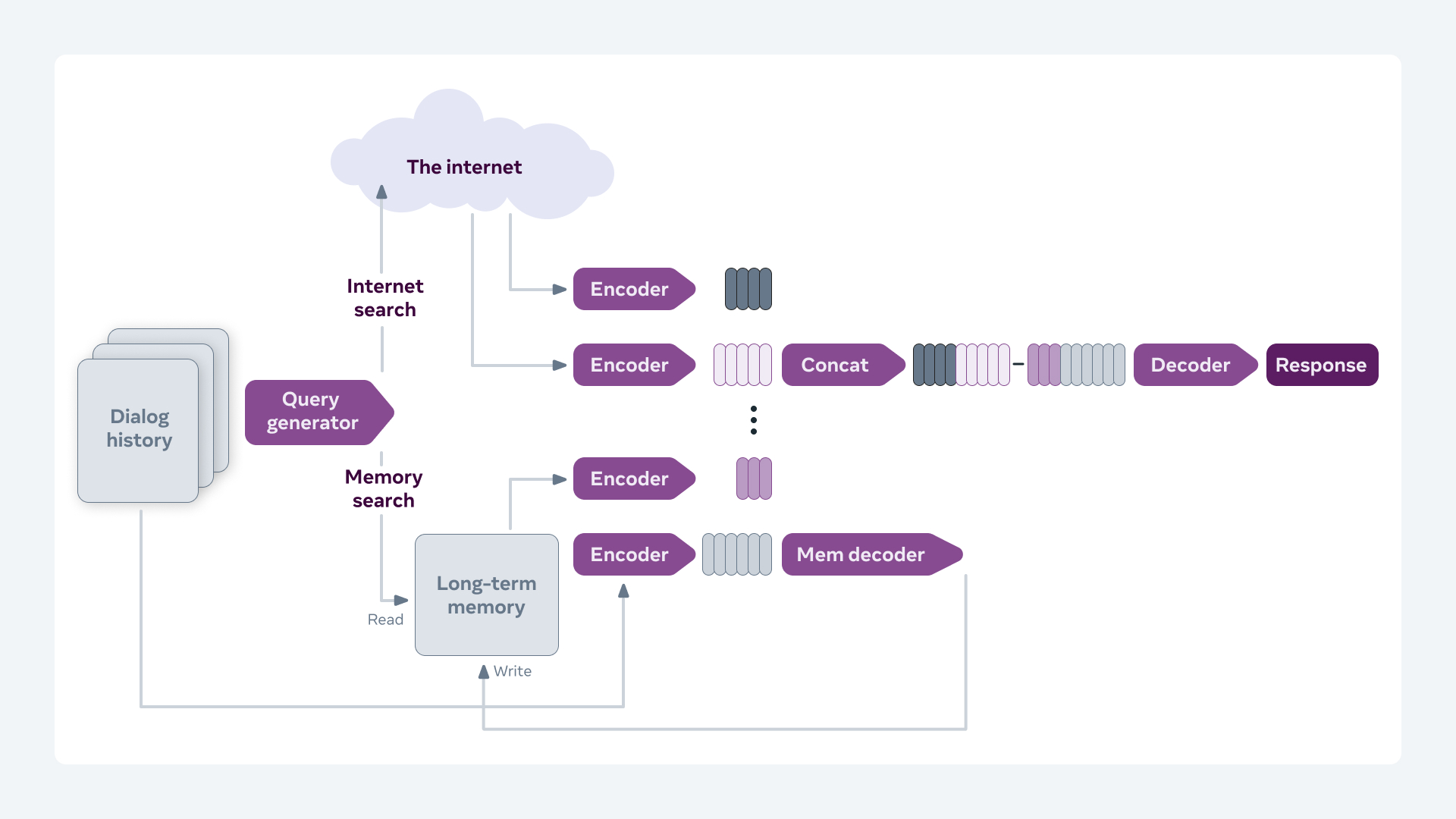

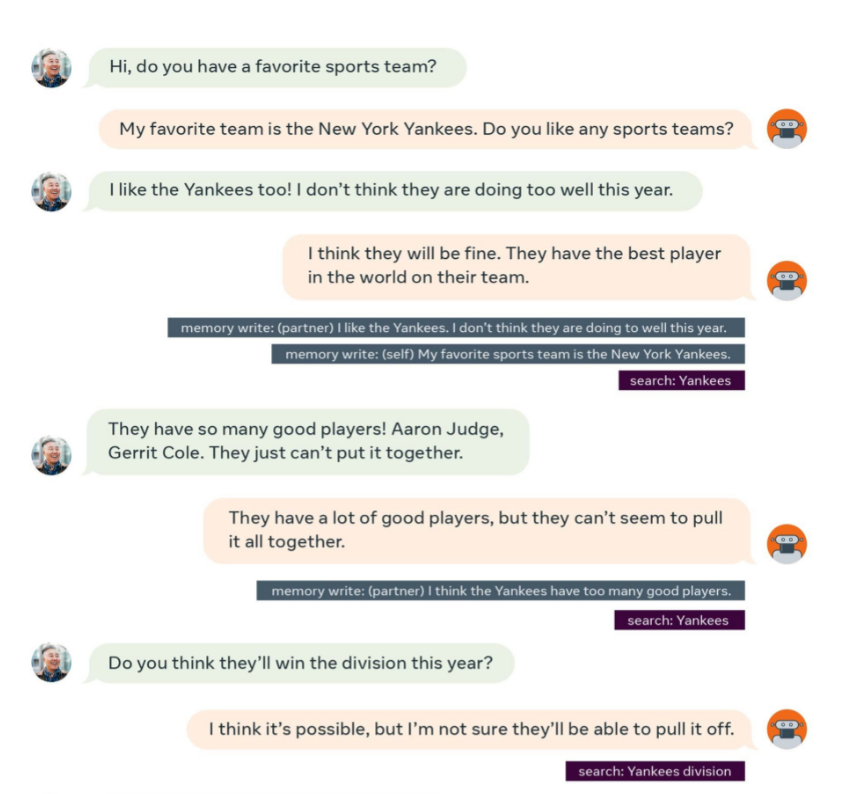

BlenderBot 2.0 is much better at this because it has the ability to build a long-term memory that it can refer back to at any moment. It’s also the first chatbot capable of searching the internet to augment its existing knowledge with more recent information, the researchers said. “During conversations, BlenderBot 2.0 can query the internet using any search engine for relevant new knowledge and can both read and write to its long-term local memory store,” they explained.

In numerous test conversations, BlenderBot 2.0 was able to draw on up-to-date knowledge, the researchers said. When asked a question, its algorithm determines whether it’s necessary to search the web before responding. If it decides it is, it searches the web and then generates a response based on what it has found. That means BlenderBot 2.0 can bring the latest sports scores, trending movies and TV shows, current news and other recent topics into a conversation.
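To make the decide-then-search flow concrete, here is a minimal Python sketch. It is not Facebook’s implementation: BlenderBot 2.0 uses trained neural models for this decision, whereas the keyword check, the `needs_search` and `respond` functions, and the `fake_search` backend below are all hypothetical stand-ins chosen for illustration.

```python
from typing import Callable, List

# Hypothetical cue words suggesting a question needs fresh information.
# A real system would learn this decision rather than use a fixed list.
FRESHNESS_CUES = ("latest", "today", "this year", "score", "recent", "trending")


def needs_search(message: str) -> bool:
    """Toy stand-in for the decision of whether to query the web."""
    text = message.lower()
    return any(cue in text for cue in FRESHNESS_CUES)


def respond(message: str, search: Callable[[str], List[str]]) -> str:
    """Search only when the message seems to need recent information."""
    if not needs_search(message):
        return "Answering from what I already know."
    results = search(message)
    if not results:
        return "I searched but found nothing new."
    return "Here is what I found: " + "; ".join(results)


def fake_search(query: str) -> List[str]:
    """Hypothetical search backend; a real one would call a search engine."""
    return [f"result for '{query}'"]
```

Passing the search function in as an argument mirrors the researchers’ point that any search engine can be plugged in; only the decision step and the response step need to know that a search happened.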

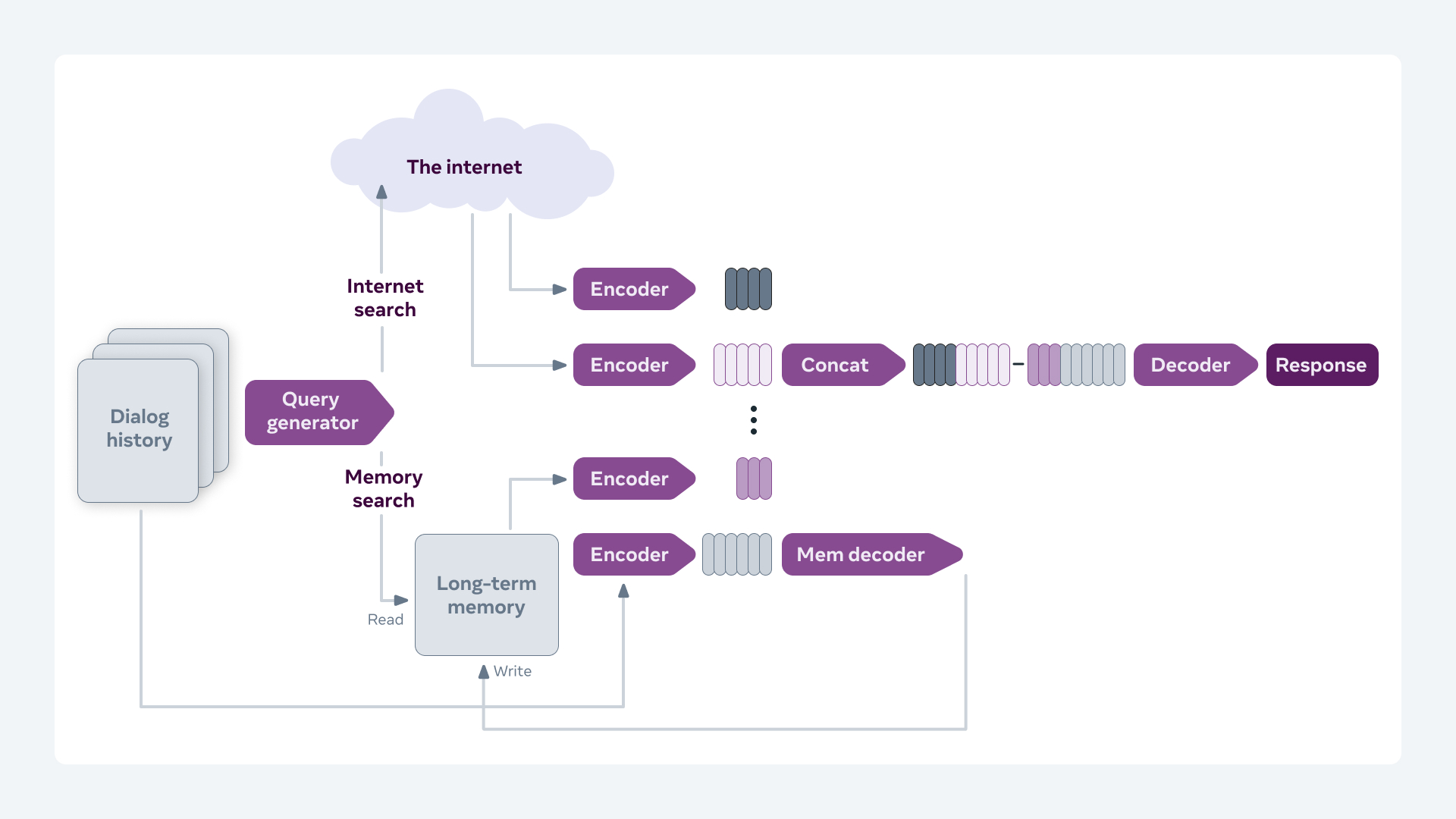

The diagram below shows how it combines this long-term memory with recent information from the web:

It can also remember the context of previous discussions, the researchers said. For instance, if someone had previously spoken with BlenderBot 2.0 about this year’s Academy Awards, it would remember that interest and possibly bring up the latest Oscar winners.
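The idea of remembering a user’s interests across sessions can be sketched in a few lines of Python. This is an illustrative assumption, not the BlenderBot 2.0 design: the `LongTermMemory` class, its per-user list of notes and the word-overlap lookup are hypothetical, and the real system relies on learned models to decide what to store and retrieve.

```python
import re
from typing import Dict, List, Set


def _words(text: str) -> Set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


class LongTermMemory:
    """Toy per-user store of notes that persists across sessions."""

    def __init__(self) -> None:
        self._store: Dict[str, List[str]] = {}

    def write(self, user_id: str, note: str) -> None:
        """Save a note about the user, skipping exact duplicates."""
        notes = self._store.setdefault(user_id, [])
        if note not in notes:
            notes.append(note)

    def read(self, user_id: str, message: str) -> List[str]:
        """Return the user's notes that share a word with the message."""
        query = _words(message)
        return [n for n in self._store.get(user_id, []) if query & _words(n)]
```

In an earlier session the bot might call `write("alice", "interested in the Academy Awards")`; in a later one, `read("alice", "Any news on the awards?")` would return that note so the reply can pick up the thread.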

BlenderBot 2.0 performed very well in tests against its predecessor, the researchers said. It achieved a 17% improvement in its “engagingness score,” a 55% improvement in its use of previous conversation sessions, and a small reduction in factual errors, known in the industry as “hallucinations.” The researchers said the results show that the new system’s long-term memory component enables it to sustain better conversations over a longer period of time.

Weston and Shuster said Facebook is open-sourcing BlenderBot 2.0, together with the data sets used to train it, for the wider AI research community to experiment with. They acknowledged that the latest version is far from perfect. Model hallucinations remain a problem, and BlenderBot 2.0 still struggles to learn from its mistakes despite its ability to build a long-term memory. There are safety issues too. But today’s improvements are a big step nonetheless.

“We look forward to a day soon when agents built to communicate and understand as humans do can see as well as talk, which we’ve explored with Multimodal BlenderBot,” the researchers wrote. “That’s why some obvious next steps are to continue to try to blend these latest techniques together into a single AI system, which is the goal of our BlenderBot research. We think that these improvements in chatbots can advance the state of the art in applications such as virtual assistants and digital friends.”
