AI

Cerebras Systems Inc., the developer of a wafer-sized artificial intelligence chip with 2.6 trillion transistors, has raised $250 million in funding to further develop its technology and expand industry adoption.

The funding was provided by Alpha Wave Ventures and Abu Dhabi Growth Fund. Cerebras Systems said in its announcement of the funding round today that it’s now valued at more than $4 billion, up from $2.4 billion in 2019.

Enterprises typically use graphics processing units in their AI projects. The fastest GPU on the market today features about 54 billion transistors. Cerebras Systems’ chip, the WSE-2, includes 2.6 trillion transistors that the startup says make it the “fastest AI processor on Earth.”

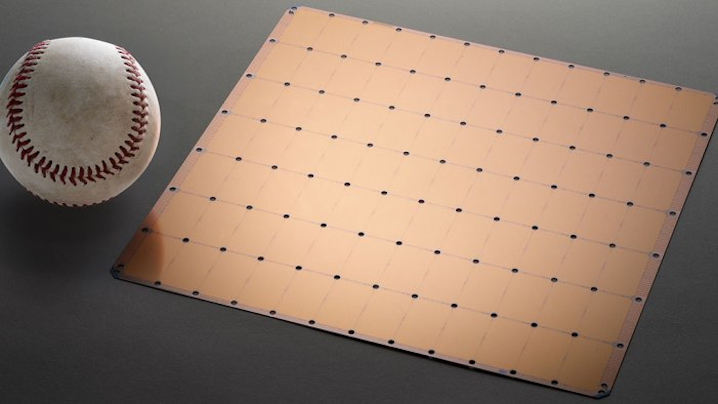

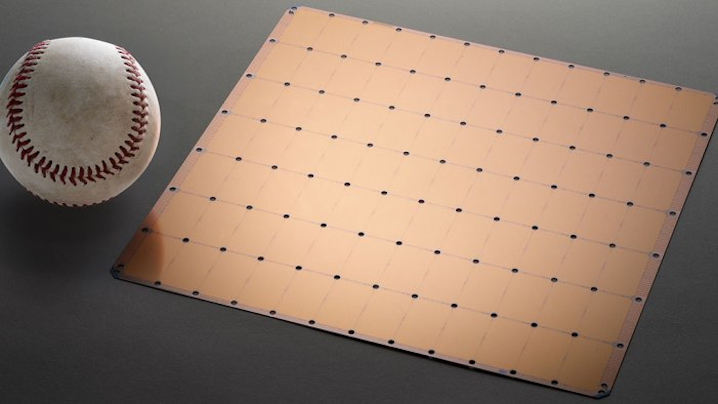

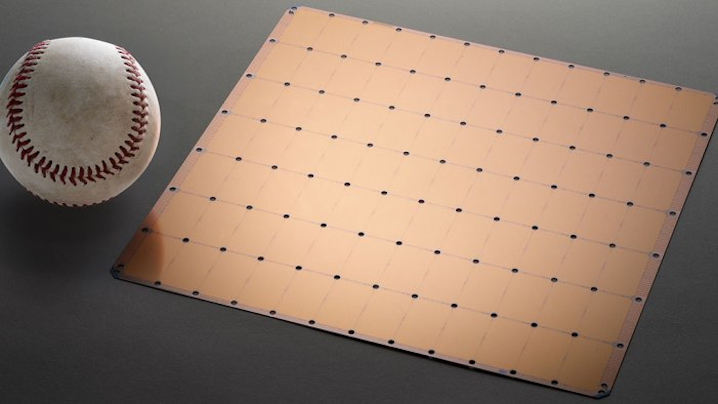

WSE-2 stands for Wafer Scale Engine 2, a nod to the unique architecture on which the startup has based the processor. The typical approach to chip production is to carve several dozen processors into a silicon wafer and then separate them. Cerebras Systems uses a vastly different method: The startup carves a single large processor into the silicon wafer and leaves it intact rather than breaking it up into smaller units.

The 2.6 trillion transistors in the WSE-2 are organized into 850,000 cores. According to Cerebras Systems, the chip’s cores are optimized for the specific types of mathematical operations that neural networks use to turn raw data into insights. The WSE-2 stores the data being processed by a neural network using 40 gigabytes of speedy onboard memory.

Cerebras Systems says that the WSE-2 has 123 times more cores and 1,000 times more on-chip memory than the closest GPU. The chip’s impressive specifications translate into several benefits for customers, according to the startup, most notably increased processing efficiency.
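For a rough sense of scale, the figures quoted above can be cross-checked with simple arithmetic. The sketch below uses only numbers from this article; the function names are illustrative, and the memory conversion assumes decimal units (1 gigabyte = 1,000 megabytes). The "implied" GPU figures are back-calculated from Cerebras' stated ratios, not taken from any GPU vendor's specifications.

```python
# Figures quoted in the article.
WSE2_TRANSISTORS = 2.6e12   # 2.6 trillion
GPU_TRANSISTORS = 54e9      # about 54 billion (fastest GPU cited)
WSE2_CORES = 850_000
WSE2_MEMORY_GB = 40
CORE_RATIO = 123            # "123 times more cores"
MEMORY_RATIO = 1_000        # "1,000 times more on-chip memory"


def transistor_ratio() -> float:
    """How many times more transistors the WSE-2 has than the cited GPU."""
    return WSE2_TRANSISTORS / GPU_TRANSISTORS


def implied_gpu_cores() -> float:
    """GPU core count implied by the 123x claim (back-calculated)."""
    return WSE2_CORES / CORE_RATIO


def implied_gpu_memory_mb() -> float:
    """GPU on-chip memory implied by the 1,000x claim.

    Assumes decimal units: 1 GB = 1,000 MB.
    """
    return WSE2_MEMORY_GB * 1_000 / MEMORY_RATIO


if __name__ == "__main__":
    print(f"Transistor ratio: {transistor_ratio():.1f}x")
    print(f"Implied GPU cores: {implied_gpu_cores():.0f}")
    print(f"Implied GPU on-chip memory: {implied_gpu_memory_mb():.0f} MB")
```

Under these assumptions, the WSE-2 has roughly 48 times as many transistors as the cited GPU, and the comparison GPU would have about 6,900 cores and about 40 megabytes of on-chip memory.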

To match the performance provided by a WSE-2 chip, a company would have to deploy dozens or hundreds of traditional GPU servers. GPU servers in an AI cluster must constantly exchange data with one another to coordinate their work. This process of exchanging data delays computations: Before processing can begin, each GPU must wait until the information it needs to perform a calculation arrives from another server.

With the WSE-2, data doesn’t have to travel between two different servers but only from one section of the chip to another, which represents a much shorter distance. The shorter distance reduces processing delays. Cerebras Systems says that the result is an increase in the speed at which neural networks can run.

Another performance boost is provided by the WSE-2’s 40 gigabytes of onboard memory. Neural networks often store data in external memory because the chip on which they run can’t accommodate all the information. Data must travel between the chip and the external memory to be processed. The WSE-2, in contrast, can store all of a neural network’s data using its onboard memory, which avoids the processing delays associated with information traveling off the chip to an external component.

Cerebras Systems sells the WSE-2 as part of a system called the CS-2. According to the startup, the system can replace dozens or even hundreds of traditional GPU servers. That’s a boon from a hardware deployment standpoint because companies need to set up less infrastructure than a traditional GPU cluster requires, which simplifies administrators’ work.

A CS-2 system costs a few million dollars, according to AnandTech. The onboard fans and other key components are redundant, meaning that if one of them malfunctions, the system can continue operating. Administrators can replace a malfunctioning part without incurring any downtime.

Cerebras Systems’ customers include AstraZeneca plc, GlaxoSmithKline plc, Argonne National Laboratory, Lawrence Livermore National Laboratory and many other organizations.

“The Cerebras team and our extraordinary customers have achieved incredible technological breakthroughs that are transforming AI, making possible what was previously unimaginable,” said Cerebras Systems co-founder and Chief Executive Officer Andrew Feldman. “This new funding allows us to extend our global leadership to new regions, democratizing AI, and ushering in the next era of high-performance AI compute to help solve today’s most urgent societal challenges – across drug discovery, climate change, and much more.”

Following the funding round, Cerebras Systems plans to grow its headcount from 400 today to 600 by the end of next year. The startup will place a particular emphasis on hiring more engineers to support product development efforts.
