Cloud-based network detection company ExtraHop Networks Inc. said today it’s helping boost visibility for organizations whose employees are using generative artificial intelligence tools such as OpenAI LP’s ChatGPT.

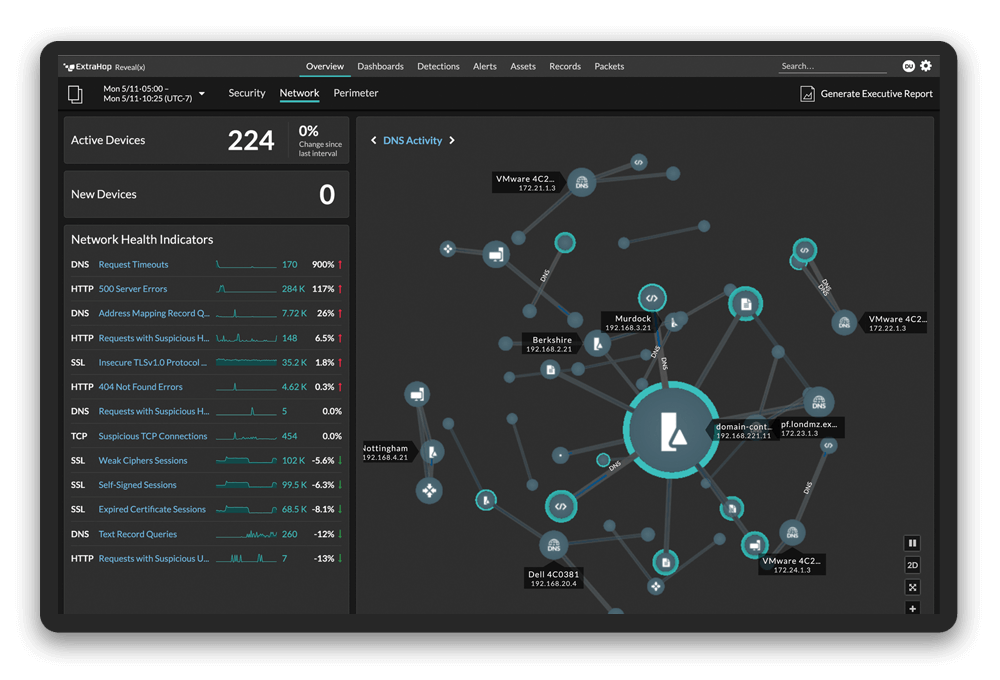

To accomplish that, it announced a new capability for its Reveal(x) platform that helps companies better understand their risk exposure and ensure generative AI is used in accordance with their AI policies.

In a blog post, ExtraHop explained that organizations have real concerns about employees using AI-as-a-service tools, because sharing data with them risks leaking sensitive data and intellectual property. ExtraHop says employees may not realize that by sharing information with these tools, they’re effectively putting that information into the public domain.

“The immediate IP risk centers on users logging into the websites and APIs of these generative AI solutions and sharing proprietary data,” the company said. “However, this risk increases as local deployments of these systems flourish and people start connecting them to each other. Once an AI service is determining what data to share with other AIs, the human oversight element that currently makes that determination is lost.”

ExtraHop aims to protect against this risk. The new capability is incorporated within Reveal(x), the company’s existing network detection and response platform, and works by tracking all devices and users connected to OpenAI domains. That lets it identify which employees are using AI services and how much data they’re sending to those domains, so security teams can assess the level of risk associated with each person’s use of generative AI.
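At its core, this kind of tracking means matching observed traffic against a list of AI service domains and totaling the bytes each user sends and receives. The Python sketch below illustrates that idea under stated assumptions. The `Flow` record, the `OPENAI_DOMAINS` list and the helper names are hypothetical, and the sketch does not represent how Reveal(x) is actually implemented.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical list of domains treated as "OpenAI domains" in this sketch;
# a real deployment would maintain its own, more complete list.
OPENAI_DOMAINS = ("openai.com", "chatgpt.com")


@dataclass
class Flow:
    """A simplified, hypothetical flow record derived from observed packets."""
    user: str
    host: str       # destination hostname, e.g. taken from DNS or TLS SNI
    bytes_out: int  # bytes sent from the user's device
    bytes_in: int   # bytes received by the user's device


def is_openai_host(host: str) -> bool:
    """True if host is a listed domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in OPENAI_DOMAINS)


def usage_by_user(flows):
    """Sum (bytes sent, bytes received) per user for flows to OpenAI domains."""
    totals = defaultdict(lambda: [0, 0])
    for f in flows:
        if is_openai_host(f.host):
            totals[f.user][0] += f.bytes_out
            totals[f.user][1] += f.bytes_in
    return {user: tuple(t) for user, t in totals.items()}


if __name__ == "__main__":
    flows = [
        Flow("alice", "api.openai.com", 2_000, 15_000),
        Flow("bob", "chatgpt.com", 8_000_000, 40_000),
        Flow("alice", "example.com", 500_000, 1_000),  # not an AI domain
        Flow("carol", "notopenai.com", 100, 100),      # look-alike, ignored
    ]
    print(usage_by_user(flows))
    # {'alice': (2000, 15000), 'bob': (8000000, 40000)}
```

Matching on a dot-prefixed suffix means `api.openai.com` counts as an OpenAI host while a look-alike such as `notopenai.com` does not.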

Reveal(x) uses network packets as its primary data source for monitoring and analysis of AI usage, enabling it to show exactly how much data is being sent to and received from OpenAI domains. That means security leaders can evaluate what falls into an acceptable range and what might indicate potential IP loss.

For instance, a user sending simple queries to ChatGPT would typically send only bytes or kilobytes of data. But if someone’s device starts sending megabytes of data, the employee could be sending proprietary data along with their query. In such cases, Reveal(x) can help identify the type of data being sent, as well as the individual files, as long as the traffic isn’t encrypted.
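The volume heuristic in that example can be expressed as a simple threshold check. This Python sketch assumes per-user totals of bytes sent to AI domains are already available and uses a hypothetical 1 MB cutoff; the article does not specify a threshold, and the function names are illustrative only.

```python
# Hypothetical cutoff for this sketch: 1 MB (decimal) sent to AI domains.
# Simple prompts usually stay in the byte-to-kilobyte range.
REVIEW_THRESHOLD_BYTES = 1_000_000


def classify_upload(bytes_sent: int, threshold: int = REVIEW_THRESHOLD_BYTES) -> str:
    """Label an upload volume as 'typical' query traffic or one to 'review'."""
    if bytes_sent < 0:
        raise ValueError("bytes_sent must be non-negative")
    return "review" if bytes_sent >= threshold else "typical"


def flag_users(sent_by_user: dict[str, int],
               threshold: int = REVIEW_THRESHOLD_BYTES) -> list[str]:
    """Return, sorted by name, the users whose upload volume needs review."""
    return sorted(
        user
        for user, sent in sent_by_user.items()
        if classify_upload(sent, threshold) == "review"
    )


if __name__ == "__main__":
    sent = {"alice": 12_000, "bob": 8_000_000, "carol": 1_000_000}
    print(flag_users(sent))  # ['bob', 'carol']
```

A byte count only signals that an upload may be worth a closer look; determining what was actually sent requires inspecting the traffic itself, which is possible only when it isn’t encrypted.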

Chris Kissel, an analyst with International Data Corp., said IP and customer data loss are among the biggest risks involved with using AI-as-a-service tools. “ExtraHop is addressing this risk to the enterprise by giving customers a mechanism to audit compliance and help avoid the loss of IP,” he said. “With its strong background in network intelligence, ExtraHop can provide unparalleled visibility into the flow of data related to generative AI.”