Pushing the envelope for generative artificial intelligence model training and deployment workflows, Nvidia Corp. today said that it’s bringing an AI foundry to Microsoft Corp.’s Azure cloud that will allow any customer to roll their own chatbot, predictive or image-generating AI.

The company announced the offering today during Microsoft’s Ignite 2023 conference, describing it as a generative AI factory, provided by Nvidia and hosted on Azure, that brings together the company’s best-in-breed enterprise AI infrastructure and developer productivity tools.

“This entire end-to-end workflow with all of its pieces, all of the infrastructure and software, are now available on Microsoft Azure,” said Manuvir Das, vice president of enterprise computing at Nvidia. “This means that any customer can come into Azure and procure the required components from Azure marketplace.”

The offering includes Nvidia’s DGX Cloud platform, now available through the Azure Marketplace, which lets companies provision and run AI workloads on demand on cloud infrastructure. Companies can spin up DGX Cloud instances with eight A100 80-gigabyte graphics processing units each, which can scale across multiple nodes to provide the compute needed for demanding AI processes such as training and fine-tuning. DGX Cloud was already available on Oracle Cloud.

Nvidia also announced that it plans to add more computing power to Azure next year by bringing its newly announced H200 Tensor Core GPU to the platform to support even larger workloads. The new GPU is purpose-built for the biggest AI workloads, including large language models (LLMs) and other generative AI models. Compared with the previous-generation H100, it offers 141 gigabytes of HBM3e memory, 1.8 times more, and 4.8 terabytes per second of peak memory bandwidth, an increase of 1.4 times.
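As a quick sanity check on those multipliers, the short sketch below divides the H200 figures by the corresponding H100 specifications. The H100 numbers used here (80 GB of HBM3 and about 3.35 TB/s for the SXM version) are assumptions added for illustration, not figures from Nvidia’s announcement.

```python
# Sanity check of the H200-vs-H100 multipliers quoted above.
# H200 figures come from the announcement; the H100 baseline is an
# ASSUMPTION (H100 SXM: 80 GB HBM3, ~3.35 TB/s), not taken from the article.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8

H100_MEMORY_GB = 80          # assumed baseline
H100_BANDWIDTH_TBPS = 3.35   # assumed baseline

memory_ratio = H200_MEMORY_GB / H100_MEMORY_GB
bandwidth_ratio = H200_BANDWIDTH_TBPS / H100_BANDWIDTH_TBPS

# Two decimals show the unrounded ratios; one decimal matches the article.
print(f"Memory: {memory_ratio:.2f}x, bandwidth: {bandwidth_ratio:.2f}x")
```

The ratios come out to roughly 1.76x and 1.43x, which round to the 1.8x and 1.4x figures cited for the new GPU.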

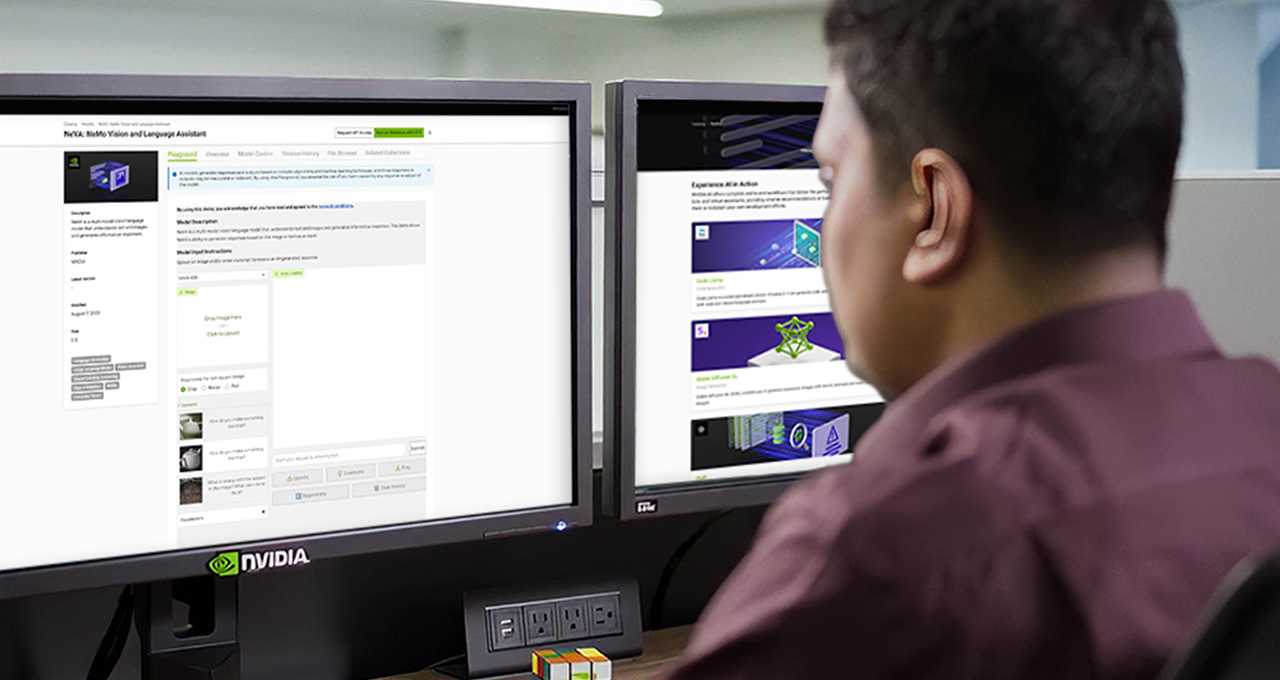

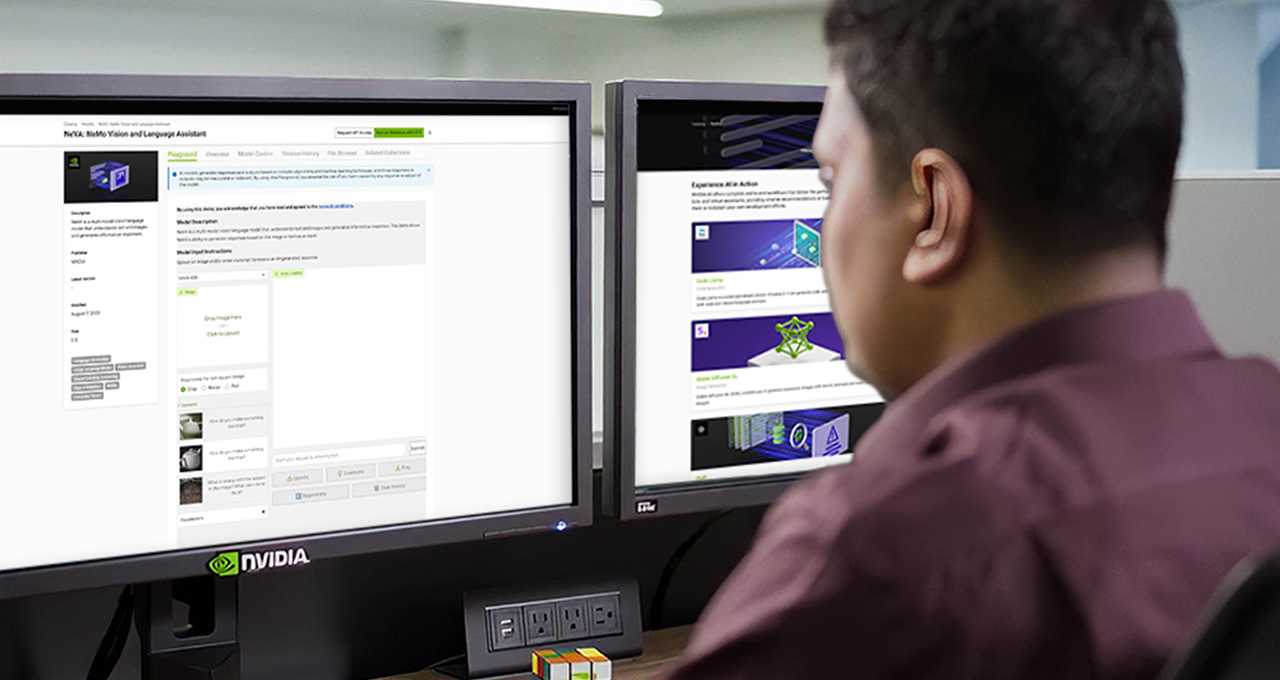

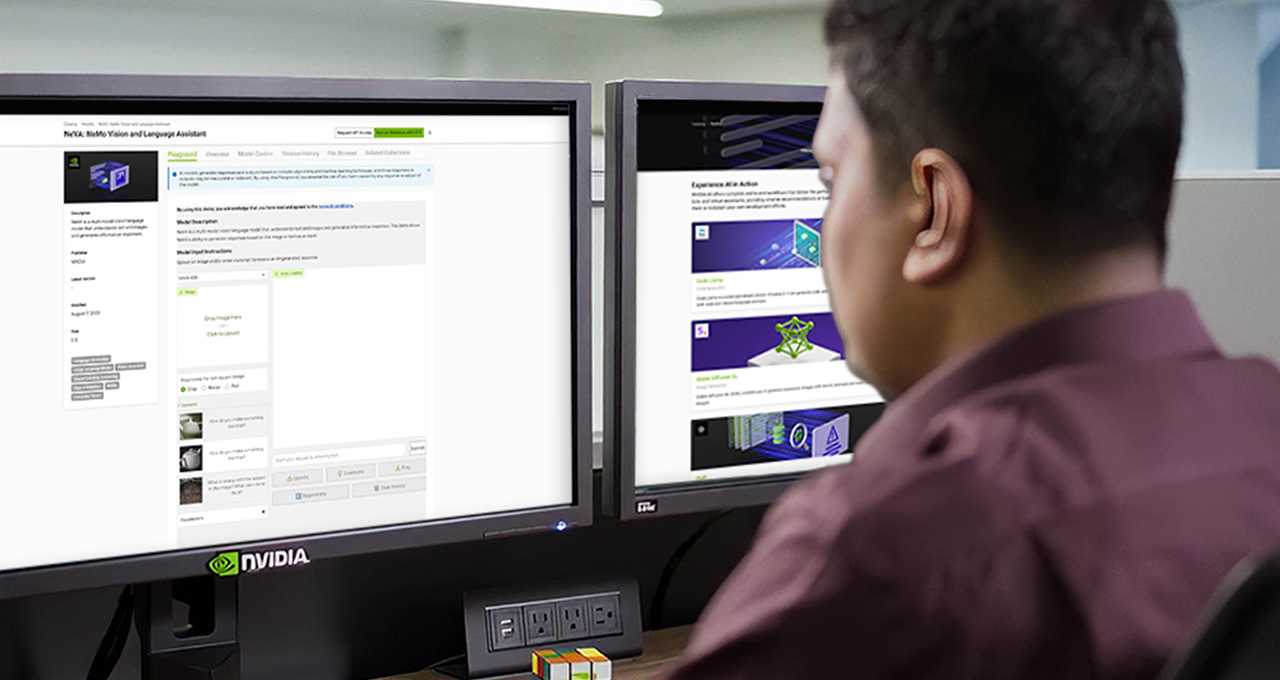

To help the industry accelerate development of custom generative AI models, Nvidia also released its own family of generative AI foundation models, called Nemotron-3 8B, along with endpoints for optimized open-source models.

The Nemotron-3 8B family is a set of 8 billion-parameter LLMs optimized to run on Nvidia hardware and designed for enterprise customers looking to build secure, accurate generative AI applications. The models are multilingual, and the company said they were “trained on responsibly sourced datasets” and can deliver performance comparable to much larger models in enterprise deployments.
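To put “8 billion parameters” in hardware terms, here is a back-of-the-envelope estimate of how much GPU memory the model weights alone would occupy at common numeric precisions, compared with a single A100 80-gigabyte GPU of the kind offered in DGX Cloud instances. It is a rough sketch under stated assumptions: 1 GB is taken as 10^9 bytes, the helper `weight_memory_gb` is hypothetical, and activations, the KV cache and optimizer state are ignored even though they can add substantially to the total, especially during fine-tuning.

```python
# Back-of-the-envelope weight-memory estimate for an 8B-parameter model.
# ASSUMPTIONS: weights only (no activations, KV cache or optimizer state),
# and 1 GB = 1e9 bytes. weight_memory_gb is a hypothetical helper.
BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "int8": 1}

NUM_PARAMS = 8e9       # "8 billion parameters", per the article
A100_MEMORY_GB = 80    # one A100 80GB GPU, per the article


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Return the storage needed for the weights, in gigabytes."""
    return num_params * bytes_per_param / 1e9


for precision, nbytes in BYTES_PER_PARAM.items():
    gb = weight_memory_gb(NUM_PARAMS, nbytes)
    fits = gb <= A100_MEMORY_GB
    print(f"{precision:>9}: {gb:5.1f} GB of weights, fits on one A100 80GB: {fits}")
```

At 16-bit precision the weights need about 16 GB, so inference on a single GPU is plausible; full fine-tuning with an optimizer such as Adam needs several times more memory than the weights alone, which is where multi-GPU instances come in.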

Out of the box, the Nemotron-3 8B models are proficient in over 50 different languages, including English, German, Russian, Spanish, French, Japanese, Chinese, Korean, Italian and Dutch.

“The new Nvidia family of Nemotron-3 8B models are also included to support the creation of today’s most advanced enterprise chat and Q&A applications, for a broad range of industries including healthcare, telecommunications and financial services,” said Erik Pounds, senior director of AI software at Nvidia.

The company also provides a curated set of optimized models for users to access, including popular community-sourced models such as Meta Platforms Inc.’s Llama 2, Paris-based startup Mistral AI’s Mistral and the image-generating model Stable Diffusion XL.

Foundation models are designed to be fine-tuned by enterprise customers on their own proprietary data, specializing them for specific use cases. Once tailored, they can be deployed virtually anywhere to power AI-based applications.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.