AI

Nvidia Corp. today released a lightweight language model, Mistral-NeMo-Minitron 8B, that can outperform comparably sized neural networks across a range of tasks.

The code for the model is available on Hugging Face under an open-source license. Its debut comes a day after Microsoft Corp. rolled out several open-source language models of its own. Like Nvidia’s new model, Microsoft’s releases are designed to run on devices with limited processing capacity.

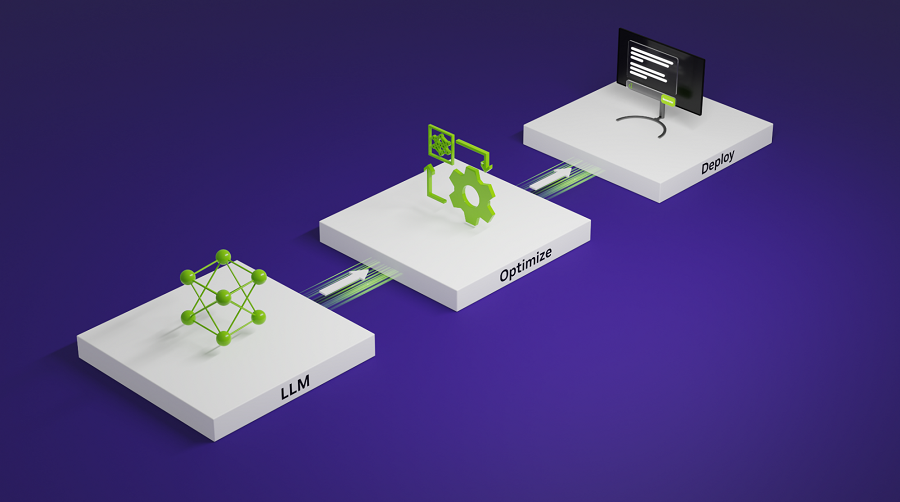

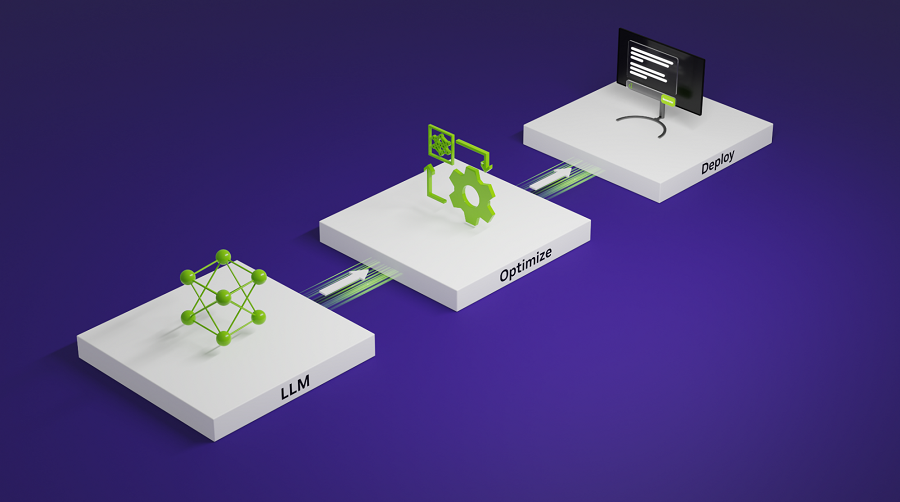

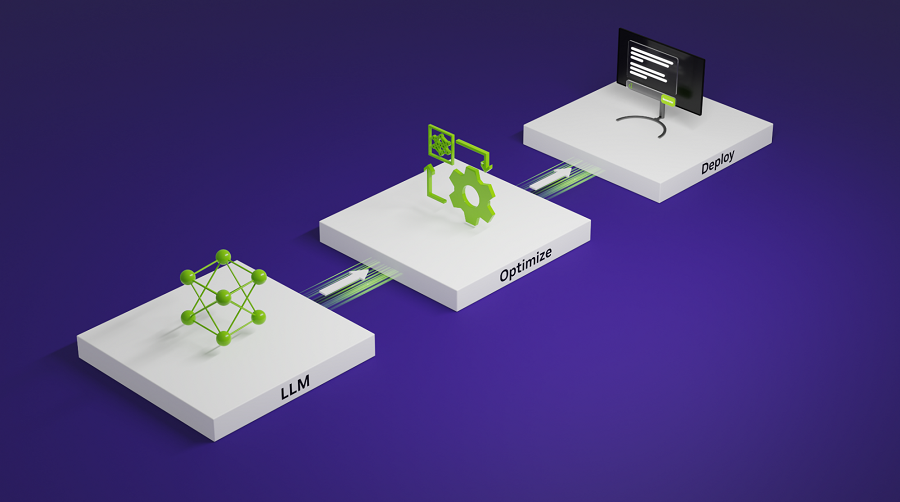

Mistral-NeMo-Minitron 8B is a scaled-down version of a language model called Mistral NeMo 12B that Nvidia debuted last month. The latter algorithm was developed through a collaboration with Mistral AI SAS, a heavily funded artificial intelligence startup. Nvidia created Mistral-NeMo-Minitron 8B using two machine learning techniques known as pruning and distillation.

Pruning is a way of reducing a model’s hardware requirements by removing unnecessary components from the neural network. A neural network is made up of numerous artificial neurons, each of which performs one relatively simple set of computations. Some of those neurons play a less active role in processing user requests than others, which means they can be removed without significantly decreasing the AI’s output quality.
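The idea behind pruning can be illustrated with a toy example. The sketch below is not Nvidia’s method, which the article does not describe in detail. It assumes a hypothetical importance score, the sum of the absolute values of each neuron’s weights, and keeps only the highest-scoring neurons in a small made-up layer.

```python
def neuron_importance(weights):
    """Score each neuron (one row of weights) by the sum of absolute weights.

    This magnitude heuristic is an assumption made for illustration only.
    """
    return [sum(abs(w) for w in row) for row in weights]


def prune_neurons(weights, keep):
    """Return the `keep` highest-scoring neurons and their original indices."""
    scores = neuron_importance(weights)
    ranked = sorted(range(len(weights)), key=lambda i: scores[i], reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original neuron order
    return [weights[i] for i in kept], kept


# A made-up layer with four neurons, two of which have near-zero weights.
layer = [
    [0.9, -1.2, 0.4],
    [0.01, 0.02, -0.01],
    [-0.7, 0.3, 1.1],
    [0.05, -0.03, 0.0],
]

pruned_layer, kept_indices = prune_neurons(layer, keep=2)
print(kept_indices)  # indices of the neurons that survive pruning
```

In this toy layer, the two neurons with near-zero weights contribute little to the output, so dropping them halves the layer’s size at a small cost in accuracy.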

After pruning Mistral NeMo 12B, Nvidia moved on to the so-called distillation phase of the project. Distillation is a process whereby engineers transfer an AI’s knowledge to a second, more hardware-efficient neural network. In this case, the second model was the Mistral-NeMo-Minitron 8B that debuted today, which has 4 billion fewer parameters than the original.
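One common way to express distillation in code is as a loss that measures how far the smaller “student” model’s predictions are from those of the larger “teacher.” The sketch below assumes a temperature-softened Kullback-Leibler divergence, a widely used formulation. The article does not say which loss Nvidia used, and the logit values are invented for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical next-token scores over a three-word vocabulary.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # nearly agrees with the teacher
far_student = [0.5, 4.0, 1.0]    # prefers a different token

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
print(loss_close < loss_far)
```

Training the student to minimize this loss pushes its output distribution toward the teacher’s, which is how the larger model’s knowledge is carried over.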

Developers can also reduce an AI project’s hardware requirements by simply training a brand new model from scratch. Distillation offers several benefits over that approach, notably better AI output quality. Distilling a large model into a smaller one also costs less because the task doesn’t require as much training data.

According to Nvidia, its approach of combining pruning and distillation techniques during development significantly improved the efficiency of Mistral-NeMo-Minitron 8B. The new model “is small enough to run on an Nvidia RTX-powered workstation while still excelling across multiple benchmarks for AI-powered chatbots, virtual assistants, content generators and educational tools,” Nvidia executive Kari Briski detailed in a blog post.

The release of Mistral-NeMo-Minitron 8B comes a day after Microsoft open-sourced three language models of its own. Like Nvidia’s new algorithm, they were developed with hardware efficiency in mind.

The most compact model in the lineup is called Phi-3.5-mini-instruct. It features 3.8 billion parameters and can process prompts with up to 128,000 tokens’ worth of data, which allows it to ingest lengthy business documents. A benchmark test conducted by Microsoft determined that Phi-3.5-mini-instruct can perform some tasks better than Llama 3.1 8B and Mistral 7B, which feature about twice as many parameters.

Microsoft also open-sourced two other language models on Tuesday. The first, Phi-3.5-vision-instruct, is a version of Phi-3.5-mini-instruct that can perform image analysis tasks such as explaining a chart uploaded by the user. It rolled out alongside Phi-3.5-MoE-instruct, a significantly larger model that features 60.8 billion parameters. Only a 10th of those parameters activate when a user enters a prompt, which significantly reduces the amount of hardware necessary for inference.
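The reason only a fraction of the parameters activate is that a mixture-of-experts model routes each input to a small subset of its “expert” subnetworks. The sketch below is a simplified illustration, not Microsoft’s implementation: the number of experts, the top-2 routing rule, the router scores and the experts themselves (simple multipliers) are all assumptions.

```python
def route_top_k(router_scores, k):
    """Pick the indices of the k experts with the highest router scores."""
    ranked = sorted(
        range(len(router_scores)), key=lambda i: router_scores[i], reverse=True
    )
    return sorted(ranked[:k])


def moe_forward(x, experts, router_scores, k=2):
    """Run only the selected experts and mix their outputs by router weight.

    Assumes the selected router scores are positive, so their sum is nonzero.
    """
    active = route_top_k(router_scores, k)
    total = sum(router_scores[i] for i in active)
    output = sum(router_scores[i] / total * experts[i](x) for i in active)
    return output, active


# Four hypothetical experts; each just scales its input by a fixed factor.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
scores = [0.1, 0.6, 0.1, 0.2]  # made-up router output for one input

y, active = moe_forward(10.0, experts, scores, k=2)
print(active)  # the experts that actually ran
```

The unselected experts are never called, so their parameters add nothing to the compute cost of processing that input.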
