AI

Meta Platforms Inc.’s artificial intelligence research team has showcased a new family of generative AI models for media that can generate and edit videos from simple text prompts.

Though the models are still a work in progress, the company said they will provide the foundation for new video creation features set to appear in Facebook, Instagram and WhatsApp next year. The Meta Movie Gen models will enable users to create high-definition videos and images, edit those creations, generate audio and soundtracks, and even insert their own likeness into them, the company said.

In a blog post, Meta's AI team explained that it aims to usher in a new era of AI-generated content for creators on its platforms. The Meta Movie Gen models build on the company's earlier work in generative AI content creation. That work began with its "Make-A-Scene" models, which debuted in 2022 and enabled users to create simple images and audio tracks, and eventually videos and 3D animations. Meta's subsequent Llama Image foundation models expanded on this, introducing higher-quality images and videos as well as editing capabilities.

"Movie Gen is our third wave, combining all of these modalities and enabling further fine-grained control for the people who use the models in a way that's never before been possible," the team wrote.

According to Meta, the Movie Gen collection is made up of four models that enable video generation, personalized video generation, precise video editing and audio generation.

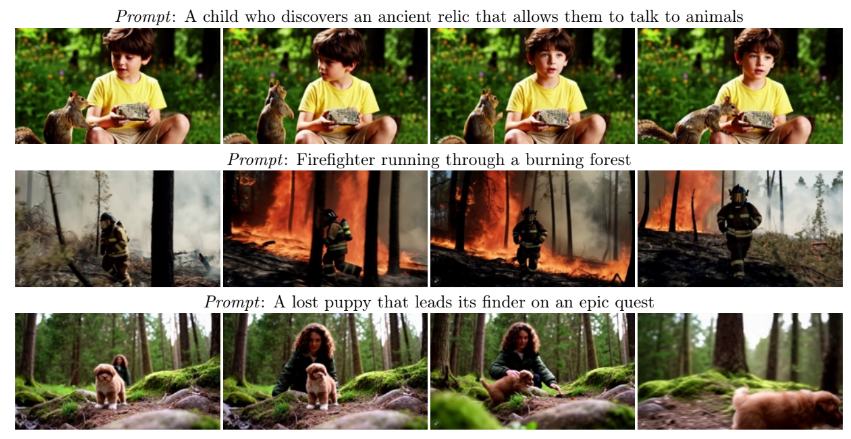

The first of the models, Video Generation, is a 30 billion-parameter transformer model that can generate videos of up to 16 seconds at 16 frames per second from prompts consisting of text, images or a combination of the two. Meta explained that it's built on a joint model architecture optimized for both text-to-image and text-to-video generation. The model can also reason about object motion, subject-object interactions and camera motion, which helps it produce more realistic movement in the videos it generates.

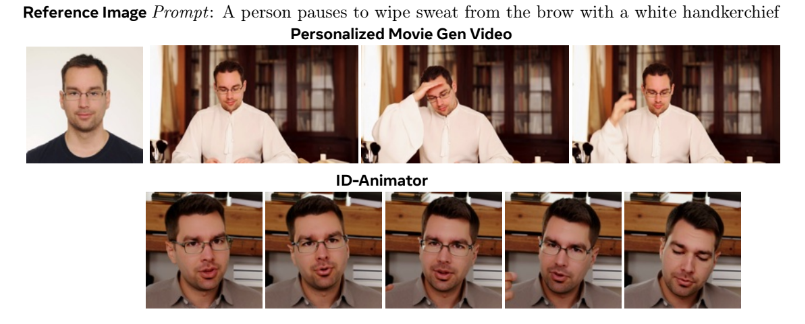

The Personalized Videos model is a bit different, as it’s specifically designed to take an image of the user and create videos starring them, based on the user’s text prompts.

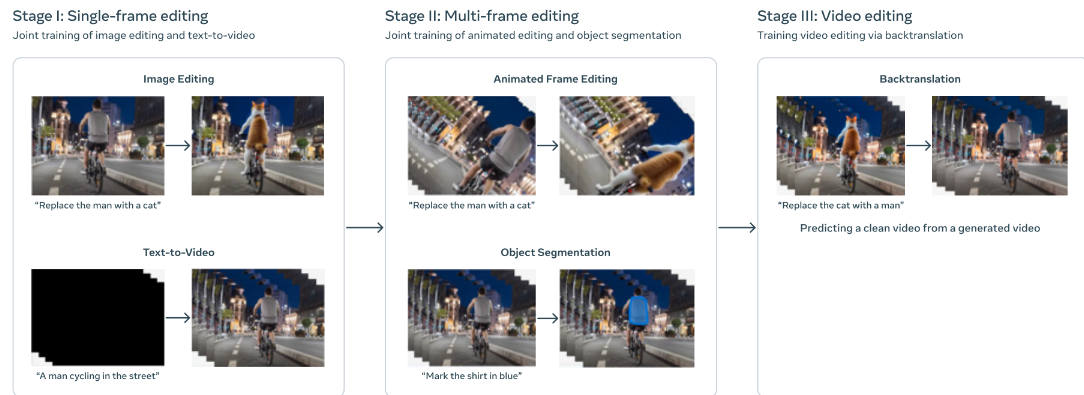

Meta explained that the same foundational transformer model was used as the basis of its Precise Video Editing model. To use it, the user simply uploads the video they want to edit, along with a text input that describes how they want it to be edited, and the model will do the rest.

The goal is to give creators more precision, the company said: they can add, remove or replace specific elements of a video, such as the background or individual objects, or apply style modifications. The model does this while preserving the original video content, targeting only the relevant pixels.

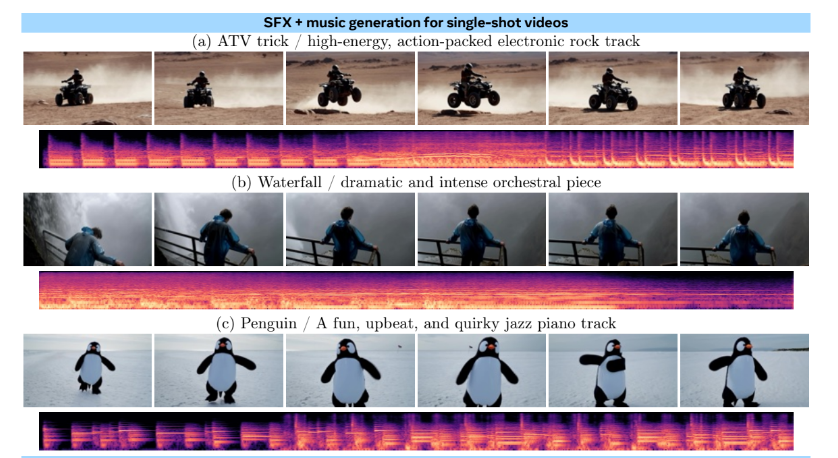

The Audio Generation tool is based on a 13 billion-parameter audio generation model that can take both video and text inputs to create high-fidelity soundtracks of up to 45 seconds. It can generate ambient sound, sound effects and instrumental background music, Meta said, and synchronize them with the content of the video.

Meta hasn't said whether or when it might make the Meta Movie Gen models available for others to use. However, the company has often chosen to open-source its AI innovations, such as its Llama models, so developers may eventually get the chance to experiment with them.

When they do launch, Meta Movie Gen will go head-to-head with a number of other video generation models, such as Runway AI Inc.’s Gen-3 Alpha Turbo, OpenAI’s upcoming Sora, Google DeepMind’s Veo, Adobe Inc.’s Firefly, Luma AI Inc.’s Dream Machine and Captions LLC’s video editing tools.

The company is confident it can compete with those rivals. It separately published a research paper for those who want a more exhaustive deep dive into the inner workings of the Meta Movie Gen models. In the paper, it claims a number of breakthroughs in model architecture, training objectives, data recipes, inference optimizations and evaluation protocols, and it believes these innovations enable Meta Movie Gen to significantly outperform its competitors.

That said, Meta concedes that there's still a lot of room for improvement in its models, and it plans further optimizations to reduce inference time and improve the quality of the videos they generate.

Holger Mueller of Constellation Research Inc. said generative AI has already revolutionized the way people write text, create images, understand documents and fix code, and the industry is now turning to the harder task of video creation.

“Creating film and video is a slow and expensive process that costs lots of money,” Mueller said. “Meta is promising to give creators a faster and much more affordable alternative with Meta Movie Gen, and it could potentially democratize movie creation. If it does, it’ll likely send a few shockwaves across the traditional movie industry.”

Meta said the next steps involve working closely with filmmakers and other creators to incorporate their feedback into the Meta Movie Gen models, with the goal of producing a finished product that will ultimately appear on platforms such as Facebook and Instagram.

“Imagine animating a ‘day in the life’ video to share on Reels and editing it using text prompts, or creating a customized animated birthday greeting for a friend and sending it to them on WhatsApp,” the company said. “With creativity and self-expression taking charge, the possibilities are infinite.”

THANK YOU