EMERGING TECH

Nvidia Corp., whose graphics chips are the basis for many recent advances in artificial intelligence, Monday night unveiled new software to accelerate AI work for applications ranging from self-driving cars to speech and image recognition.

The company, which is opening one of its GPU Technology Conferences Tuesday morning in Beijing with a keynote by Chief Executive Jensen Huang, also announced several deals with Chinese server makers and cloud computing providers that position its graphics processing units to dominate that market as much as they do the rest of the world.

The moves are aimed at keeping Nvidia, whose GPUs and associated software have been the dominant means of training and running deep learning and other machine learning systems for the past few years, on top worldwide versus increasingly active rival makers of machine learning-oriented chips such as Intel Corp. and Google Inc.

“Other vendors are working aggressively to close the gap, but the gap is quite wide,” Chirag Dekate, Gartner Inc.’s research director for high-performance computing and machine learning, said in an interview.

First, Nvidia unveiled a new technology called TensorRT 3. It’s a so-called compiler and runtime engine that accelerates the running of AI algorithms in the cloud and on devices, a process known as “inferencing,” by as much as 40 times compared with standard central processing unit chips, depending on which Nvidia chips are used. This process is distinct from the process of “training” algorithms, or letting them learn to distinguish images, words or videos on their own instead of being explicitly programmed.

“The traditional approach to inferencing is not scaling,” said Paresh Kharya, Nvidia’s lead product manager for accelerated computing. For instance, he estimated that it would cost Chinese speech technology firm iFlyTek more than $1 billion worth of servers to serve its 500 million daily active users 15 minutes of speech each per day. There are similar challenges for Google, which translates 140 billion words per day, and LinkedIn, which processes 2 trillion messages a day.

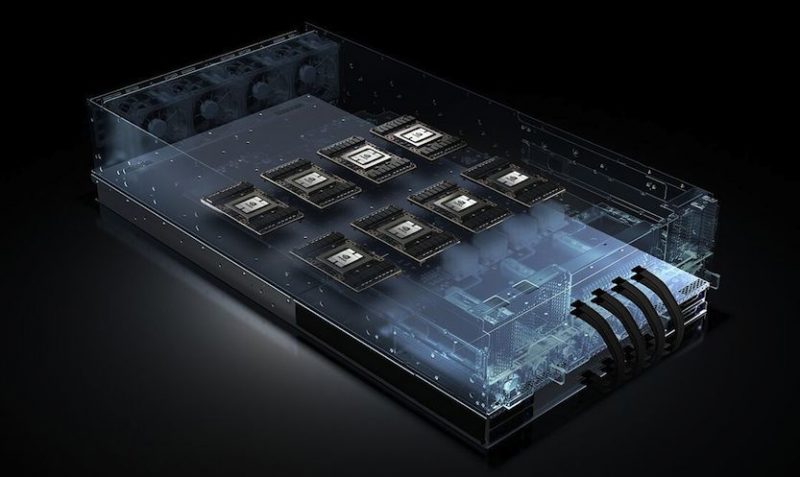

Nvidia HGX server design

One eight-GPU Nvidia HGX server, for instance, can do what 150 CPU-based servers can on speech recognition, he said. Nvidia uses several technologies to do this, among them the ability to handle multiple streams of processing so, for example, several sentences can be translated at once.
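To put the figures Kharya cited in perspective, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope illustration: the user count, minutes per user and 150-to-1 server ratio come from the statements above, while the function names and the 30,000-server CPU fleet are hypothetical values chosen for the example, not numbers from Nvidia or iFlyTek.

```python
import math

# Figures quoted in the article
DAILY_ACTIVE_USERS = 500_000_000   # iFlyTek daily active users
MINUTES_PER_USER = 15              # minutes of speech per user per day
CPU_SERVERS_PER_HGX = 150          # one eight-GPU HGX server vs. CPU servers (speech recognition)


def daily_speech_minutes(users=DAILY_ACTIVE_USERS, minutes=MINUTES_PER_USER):
    """Total minutes of speech to process per day."""
    return users * minutes


def hgx_servers_needed(cpu_servers, ratio=CPU_SERVERS_PER_HGX):
    """HGX servers needed to match a CPU fleet, rounding up to whole servers."""
    return math.ceil(cpu_servers / ratio)


if __name__ == "__main__":
    total = daily_speech_minutes()
    print(f"Speech to process per day: {total:,} minutes")

    # Hypothetical fleet size, assumed purely for illustration.
    hypothetical_cpu_fleet = 30_000
    gpu_fleet = hgx_servers_needed(hypothetical_cpu_fleet)
    print(f"{hypothetical_cpu_fleet:,} CPU servers ~ {gpu_fleet:,} HGX servers")
```

The sketch treats the 150-to-1 ratio as constant across workloads, which is a simplification; Kharya cited it for speech recognition only.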

“This is an essential component of Nvidia’s edge-to-data center strategy,” Dekate said. “It further widens the gap between Nvidia and its competitors.”

Nvidia also announced that top Chinese server makers, including Huawei Technologies Co. Ltd., Inspur Systems Inc. and Lenovo Group Ltd., will use Nvidia’s AI design, the HGX reference architecture, in their systems for large “hyperscale” data centers. These are the same designs used in Facebook Inc.’s Big Basin systems, Microsoft’s Project Olympus initiative and Nvidia’s own DGX-1 AI supercomputers.

In addition, leading Chinese cloud providers, including Alibaba Cloud, Baidu Cloud and Tencent Cloud, will use Nvidia chips based on its Volta GPU design for their AI services. In particular, they’ll use Nvidia’s V100 data center GPU, which the company says is five times faster than the previous generation, called Pascal. And Chinese e-commerce giant JD.com will use drones powered by Nvidia’s Jetson “supercomputer on a module” for deliveries of food and medicines.

China’s a key market, said Dekate. “Alibaba Cloud, Baidu and Tencent are some of the largest cloud ecosystems in the world,” he said. “With deep inroads into the Chinese market, Nvidia essentially has ensured that deep learning workloads, when run in public cloud ecosystems, will be running on Nvidia GPUs with some exceptions.”
