BIG DATA

Looker Inc.’s business intelligence software can be used to deploy education resources more effectively in inner-city neighborhoods, identify hot spots of violent crime and pinpoint income inequalities in the workforce. It can also be used for racial profiling, political gerrymandering and identifying targets for terrorist attacks. Is it Looker’s job to choose?

Chief Executive Frank Bien believes it is, at least to some extent. He’s promoting an agenda he calls “Data for Good and not Evil,” and he’s willing to draw a line in the sand. Looker won’t do business with any company that would use its software to discriminate against protected classes or to manipulate election results.

The company is also engaging in an ongoing dialogue with employees to better define both appropriate and inappropriate use cases for its software. “There will be hard decisions and sales that we may need to bow out of,” Bien said in an interview. “Employees want this clarity, and I think our first obligation is there.”

Bien is exhorting his colleagues in the big data business to do the same. “We firmly believe that data science professionals and industry groups need to define this issue,” he said. “Tech vendors are going to have some hard decisions to make about how their products are used.”

The CEO isn’t alone in calling for big data companies to take a more active role in data responsibility. During Salesforce.com Inc.’s most recent earnings call with analysts, CEO Marc Benioff called for stricter government regulations on use of private data by analytics firms and tool makers. “We need a national privacy law in the U.S. that looks a lot like GDPR,” he said, referring to the European Union’s new General Data Protection Regulation.

Hadoop inventor Cutting doesn’t want a “dystopian future to come to be.” (Photo: Twitter)

Doug Cutting, creator of the seminal Hadoop software for storing and processing massive amounts of data and chief architect at big data firm Cloudera Inc., has been urging his contemporaries for years, in a presentation he calls “Pax Data,” to take a more activist stance on data use.

Interest in the topic has been rekindled, he said, in the wake of controversies such as the Cambridge Analytica scandal and recent protests by Google Inc. employees over the company’s sale of technology to the Pentagon that could be used to identify individuals in surveillance videos. Google felt forced on Thursday to issue an eight-point set of guidelines, including a pledge not to weaponize the use of artificial intelligence in these kinds of situations.

The more data people give up about themselves, the greater the potential for abuse. “If you look at most science fiction novels, the people who collect data are the bad guys,” Cutting said. “I don’t want this dystopian future to come to be.”

Most Americans would probably agree that their country hasn’t been this politically polarized since the Vietnam War, but big data has injected a new and potentially virulent factor into the equation. The same software that can detect abuses like racial profiling can also be used to enable them. That has big data companies on the horns of a dilemma: Should they take an active role in denying access to public opinion manipulators and hate-mongers? Or should they regard themselves as humble — and silent — tool makers?

And if they are tool makers, are those tools closer in function to guns or hammers?

SiliconANGLE contacted some of the largest providers of big data software, as well as organizations that specialize in gathering and analyzing data, to get a sampling of opinion. Not surprisingly, many declined to be interviewed. Among those who would speak on the record, none currently has a firm policy restricting the use of its products beyond prohibiting uses that are patently illegal. Nearly all acknowledge, however, that social responsibility has become an increasingly common topic of discussion internally, and that pressure from politically minded employees is spurring them to monitor the use of their products more carefully.

“The big data movement right now is at an ethical crossroads,” said Lydia Clougherty-Jones, a research director in the data and analytics group at Gartner Inc. “How do you ethically use the data for value – such as improving efficiency and detecting fraud – in a way that also pays attention to ethical responsibilities?”

The experience of Neo4j Inc. illustrates the fine line these companies must walk. The company’s namesake graph database technology enables users to discover relationships between data elements that aren’t obvious on the surface. That’s made it a favorite of investigative journalists, who use graph technology to peer into the byzantine webs of hidden connections that characterize such areas as campaign financing and money laundering.

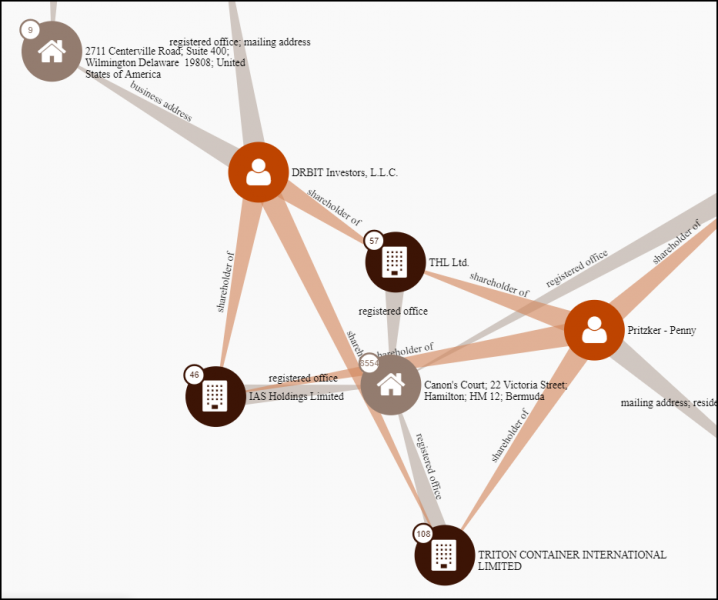

Sampling of former U.S. Commerce Secretary Penny Pritzker’s offshore connections (Source: Paradise Papers)

Neo4j donated its technology to the International Consortium of Investigative Journalists for use in researching the Paradise Papers, a series of 2017 reports on the ways in which companies and wealthy individuals use offshore havens to avoid paying U.S. taxes. It has also participated in a project to unwind the factors that influenced the 2016 U.S. election and sponsored a journalism internship. And it assigns some members of its developer relations teams to assist journalists in applying its technology to their craft.

“We’re all very motivated when we see that the product is used for good,” said Philip Rathle, Neo4j’s vice president of products.

However, the company’s customers also include controversial agrichemical giant Monsanto Co., several unnamed government agencies and makers of drones for use in surveillance. “We don’t publicize them that much,” Rathle acknowledged, but the company reasons that the good that can come from its government relationships exceeds any potential for misuse.

Like many of the companies that were interviewed, Neo4j leaves it up to employees to determine their own comfort levels and doesn’t penalize people for opting out of projects or declining to work with customers whose activities offend them. “No one should ever be forced to engage with an organization that makes them uncomfortable,” Rathle said.

But, like most of its peers, Neo4j has declined to create a formal policy. The problem isn’t the obvious cases like white supremacist groups and terrorist organizations, but the vast gray areas in the middle. “I love the concept of saying we want to make sure the tools are used for good, but I wouldn’t want to stand on the rooftops and say there’s a magic way to do that,” said Mike Tuchen, CEO of Talend SA.

For example, gerrymandering, the practice of redrawing electoral district boundaries to favor candidates of a certain party, isn’t illegal unless a court rules that a particular arrangement is, but many people find it repugnant. And though executives’ political opinions may bias them against certain liberal or conservative interest groups, that isn’t a reason not to do business with them.

“The definition of appropriate is so vague that it would be difficult to enforce,” said Gartner’s Clougherty-Jones. “Data isn’t proprietary like a trademark, so creating a contract that restricts downstream use is challenging.”

Looker’s Bien: No excuse for inaction. (Photo: Looker)

However, arguing that data responsibility is too slippery a slope to tread upon ducks the hard questions that big data firms need to answer, said Looker’s Bien. “It can be a justification for doing nothing,” he said. “There are issues we want to stay away from, but that doesn’t mean there’s nothing for us to do.”

At the moment, informal policies predominate. “The test that I use personally is that if any of the use cases were reviewed by our customers, would we be proud of what they did?” said Jack Norris, senior vice president of data and applications at MapR Technologies Inc. “We don’t have a policy, but that’s kind of our zeitgeist.”

And some software companies have so little visibility into how their products are used that monitoring customer behavior is all but impossible. For example, Talend distributes its integration software through multiple channels and also provides a freely downloadable open source version. “Trying to make value judgments worldwide when you sell through channels isn’t really practical,” Tuchen said.

Data integration startup Dremio Corp. also distributes a version of its analytics platform under an open-source license. “You may have users in a country that you don’t want to do business with, but in open source, you don’t have visibility into those things,” said Chief Marketing Officer Kelly Stirman.

At the other extreme are companies such as Looker and Hortonworks Inc., which have extensive professional services operations that should permit them to see in detail how their software is being used. Looker doesn’t have a written policy on appropriate data use, but it employs a compliance team to perform regular assessments to ensure that “use of any data is transparent, safe and respectful,” according to its website. Hortonworks didn’t respond to interview requests.

Absent hard guidelines, trust and transparency may have to suffice. “I’ve been trying to encourage the industries that collect data to build more trust with their customers,” Cutting said. “They can do that by providing audits periodically to prove that what they are doing is what they say they’re doing.”

Executives at tools vendors also point the finger at data analytics firms as being more appropriate candidates to regulate data use. For example, IBM Corp. has no policies about how its extensive line of database and analytics tools may be used, but says it expects customers to do the right thing. The company has guidelines for how it expects its customers to treat personal information, but ultimately leaves the decision in customer hands.

Saying customers should behave responsibly and checking to make sure they do are different things, as Facebook found out when Cambridge Analytica violated the social network’s terms of use. In the burgeoning world of professional data analytics services, there are essentially no rules.

MariaDB’s Howard: Beware the data mercenaries. (Photo: LinkedIn)

“No one knows what Palantir is doing,” said MariaDB Corp. CEO Michael Howard, referring to Palantir Technologies Inc., the secretive firm that conducts complex big-data projects for many government agencies and corporations.

The severe shortage of data scientists has forced many organizations to turn to boutique firms for machine learning and artificial intelligence expertise without knowing, or perhaps caring, how the data they share with those contractors is used. Howard calls these firms “uncontrolled data mercenaries.” “They aren’t selling firearms. They’re armies, platoons and SEAL operations,” he said.

Data analytics firms are understandably reluctant to talk about the issue, and none of the half-dozen SiliconANGLE contacted would speak on the record. The Cambridge Analytica case illustrates the problem, however. The company gained access to Facebook data through a third party and then sold it without permission, despite Facebook’s published prohibitions against doing so.

Companies such as Segment Inc. and Zeta Interactive Corp. gather data on hundreds of millions of consumers and perform customized analytics on behalf of customers for use in product development and marketing. No one has accused either of those companies of wrongdoing. But the sphere in which they operate has few rules, and both companies’ privacy policies leave responsibility for protection of the data they collect to individuals and client companies.

GDPR could be a turning point. The law imposes strict rules on how data about European Union citizens may be gathered, used and maintained, with onerous penalties for violators. Australia has adopted similar regulations and Canada is considering them. Although no one expects the U.S. to adopt similar regulations in the current political climate, the pressure on government to do something grows with each prominent data breach.

Big-data executives differ on whether that would be a good thing. “GDPR could be a game-changer,” said Looker’s Bien. “We have to be more clear about what are appropriate and inappropriate uses of data around such things as elections, for example. GDPR is a great opportunity.”

However, large-scale government regulation is an option few executives want to contemplate. Most would rather see the industry regulate itself as the Financial Industry Regulatory Authority Inc. does for investment professionals and the American Bar Association does for lawyers.

Self-regulation “is in our business interests long-term because it will permit the industry to flourish,” Cutting said. “If consumers reject — or governments outlaw — certain kinds of data collection, then you’re going to disable progress.”

In the absence of self-imposed rules, transparency is the most likely immediate alternative, but that doesn’t need to be a bad thing. In fact, IBM research conducted on the eve of GDPR implementation found that compliance executives had turned positive on the regulations, believing that increased accountability and attention to data stewardship would improve customer relationships.

“If individuals trust an organization, they’re more likely to share their data,” said MapR’s Norris. “With trust comes the ability to do some interesting things.”

With trust in place across businesses and consumers, it may not matter whether big-data tools look like guns or like hammers. In the end, both can be beaten into plowshares.
